C is for CAT 🐈

Exploring machines' ABCs

Digging into machine learning (ML) tools, I found that a few publicly available datasets are commonly used to train the ML tools that surround us.

This is fascinating and scary.

Those datasets contain weird stuff... and they are the building blocks of the tech that makes decisions for us and about us.

One of those popular datasets is COCO (Common Objects in Context), built from people's Flickr photos, with their legal consent but probably without their knowledge.

Out of curiosity, I started digging through the 42.7 GB of images in the COCO dataset, where you can find 330K images containing over 1.8 million objects in 80 object categories and 91 stuff categories. 😱
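If you want to poke at numbers like these yourself, here is a minimal sketch that counts annotated objects per category. It assumes the standard COCO annotation JSON layout (a `categories` list with `id` and `name`, and an `annotations` list where each entry is one object with a `category_id`). The function names are made up for illustration, and the file name `instances_val2017.json` is only an assumption about which annotation file you downloaded.

```python
import json
from collections import Counter


def load_coco(path: str) -> dict:
    # Hypothetical helper: e.g. load_coco("annotations/instances_val2017.json")
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def count_instances_per_category(coco: dict) -> dict[str, int]:
    """Count annotated object instances per category name, most frequent first."""
    # Map numeric category ids to human-readable names ("cat", "pizza", ...).
    id_to_name = {cat["id"]: cat["name"] for cat in coco["categories"]}
    # Every entry in "annotations" is one object instance in one image.
    counts = Counter(ann["category_id"] for ann in coco["annotations"])
    # Categories with no annotations at all simply do not appear in the result.
    return {id_to_name[cat_id]: n for cat_id, n in counts.most_common()}


if __name__ == "__main__":
    # Tiny hand-made example in COCO layout (not real COCO data).
    toy = {
        "categories": [{"id": 1, "name": "person"}, {"id": 17, "name": "cat"}],
        "annotations": [
            {"id": 1, "image_id": 10, "category_id": 17},
            {"id": 2, "image_id": 10, "category_id": 1},
            {"id": 3, "image_id": 11, "category_id": 17},
        ],
    }
    print(count_instances_per_category(toy))
```

On the real annotation file you would call `count_instances_per_category(load_coco(path))`; the toy dict just shows the shape of the data.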

-> Here are some wonders found inside the COCO val2017 dataset: 🤦‍♂️

*Personally, I don't identify with the look & feel of the COCO dataset... my food does not look like that, my living room does not look like that, my hometown does not look like that... and so on...

👉 Explore the COCO dataset here

COCO Categories: person / bicycle / car / motorcycle / airplane / bus / train / truck / boat / traffic light / fire hydrant / street sign / stop sign / parking meter / bench / bird / cat / dog / horse / sheep / cow / elephant / bear / zebra / giraffe / hat / backpack / umbrella / shoe / eye glasses / handbag / tie / suitcase / frisbee / skis / snowboard / sports ball / kite / baseball bat / baseball glove / skateboard / surfboard / tennis racket / bottle / plate / wine glass / cup / fork / knife / spoon / bowl / banana / apple / sandwich / orange / broccoli / carrot / hot dog / pizza / donut / cake / chair / couch / potted plant / bed / mirror / dining table / window / desk / toilet / door / tv / laptop / mouse / remote / keyboard / cell phone / microwave / oven / toaster / sink / refrigerator / blender / book / clock / vase / scissors / teddy bear / hair drier / toothbrush / hair brush

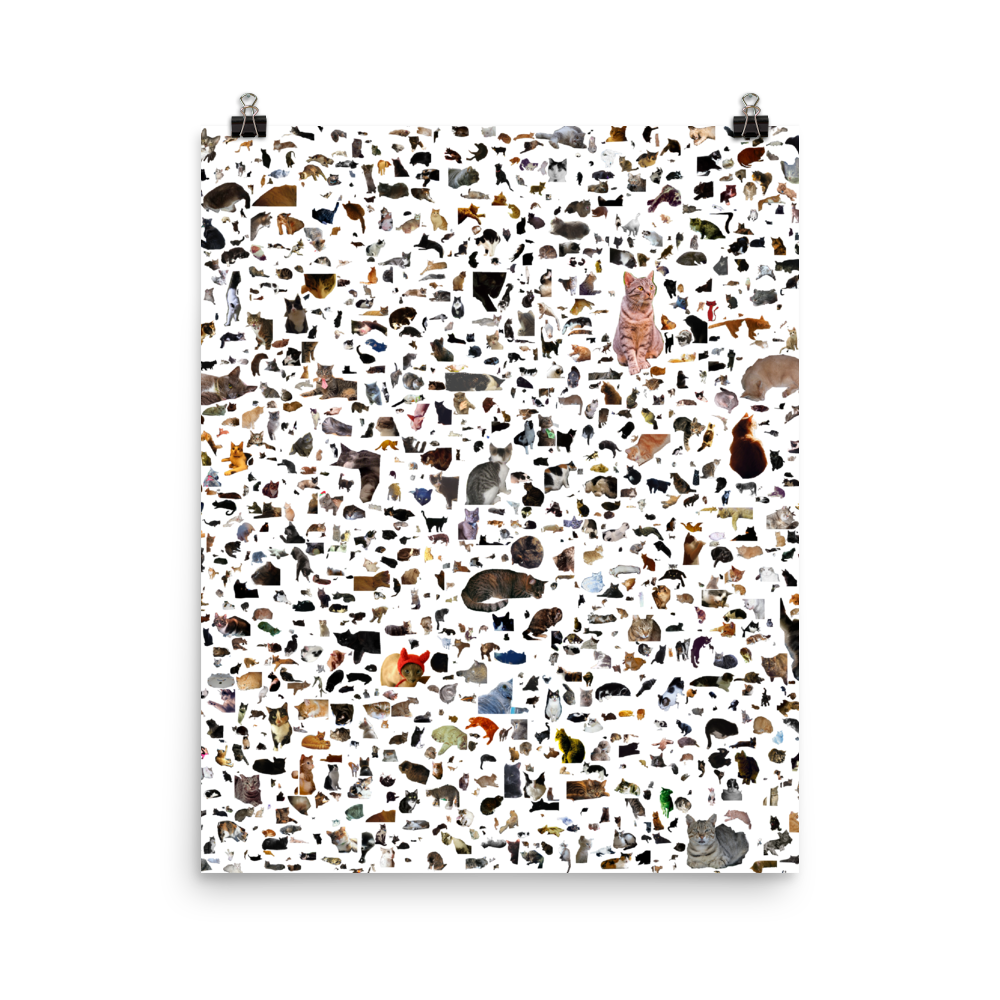

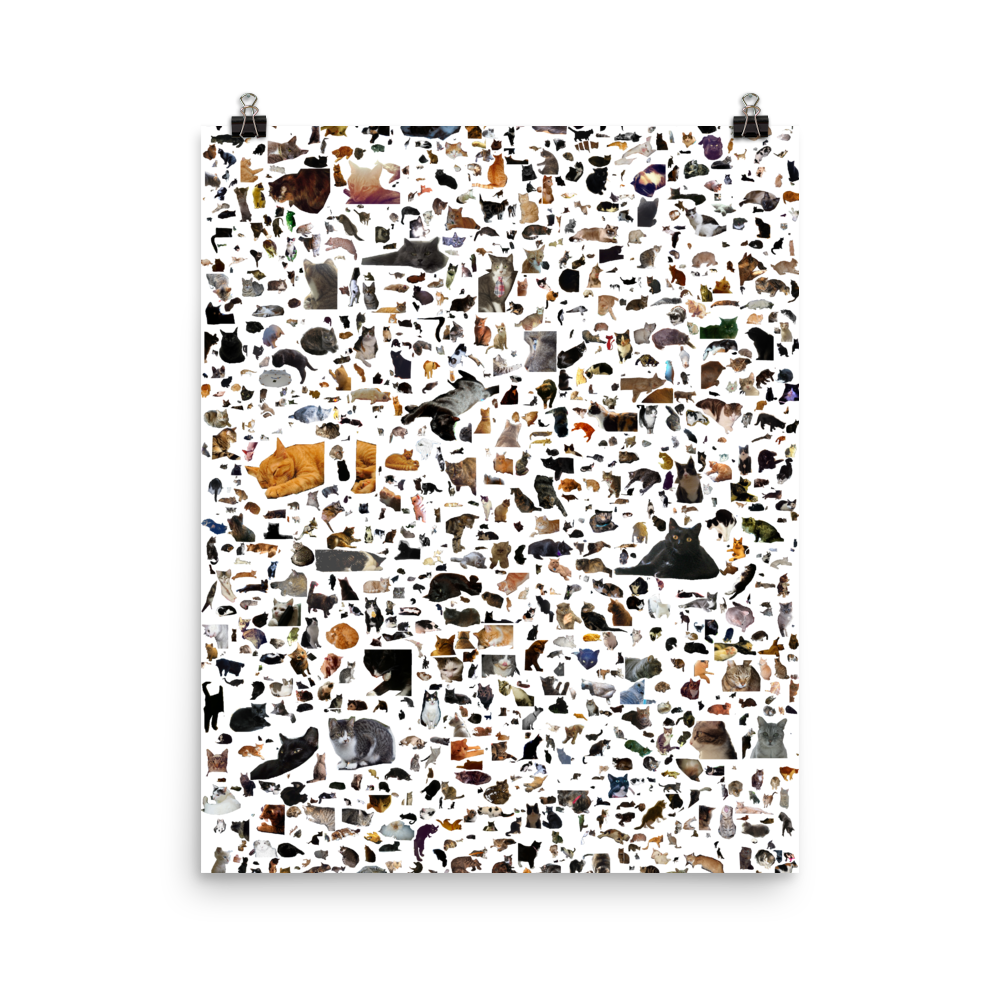

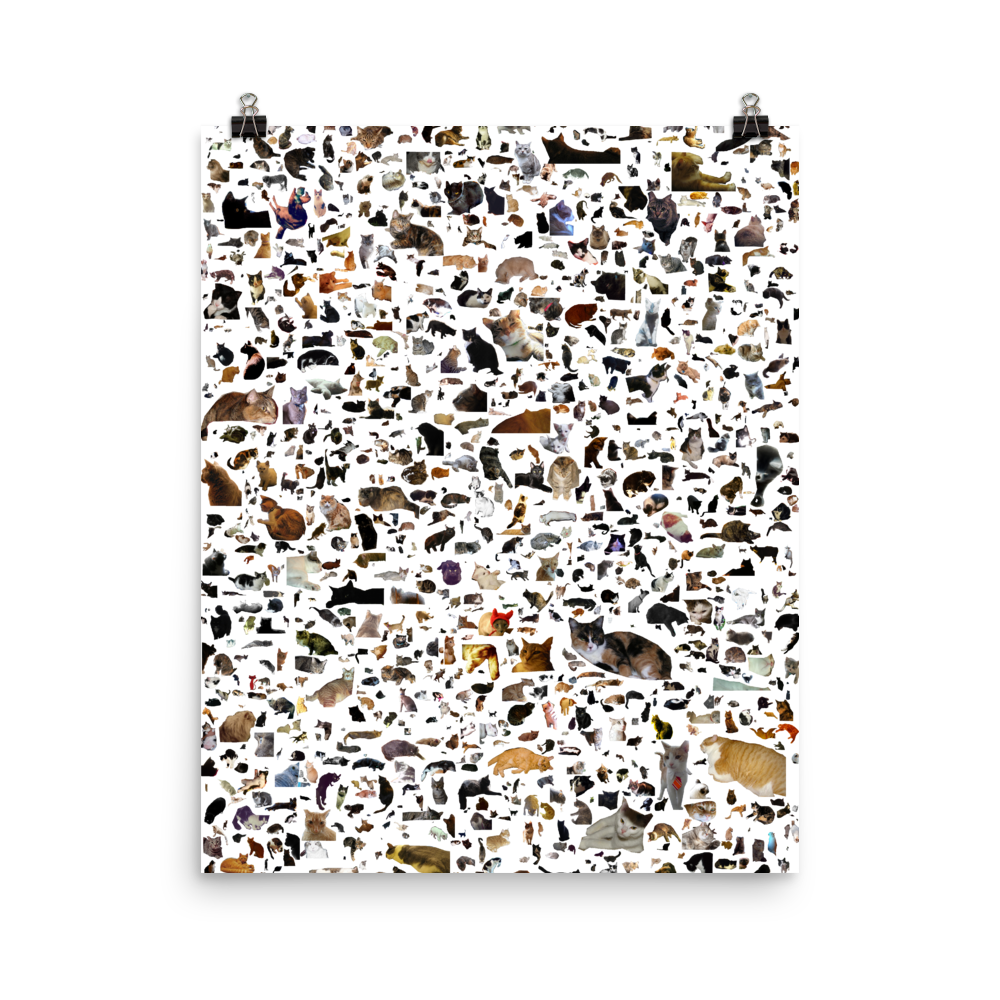

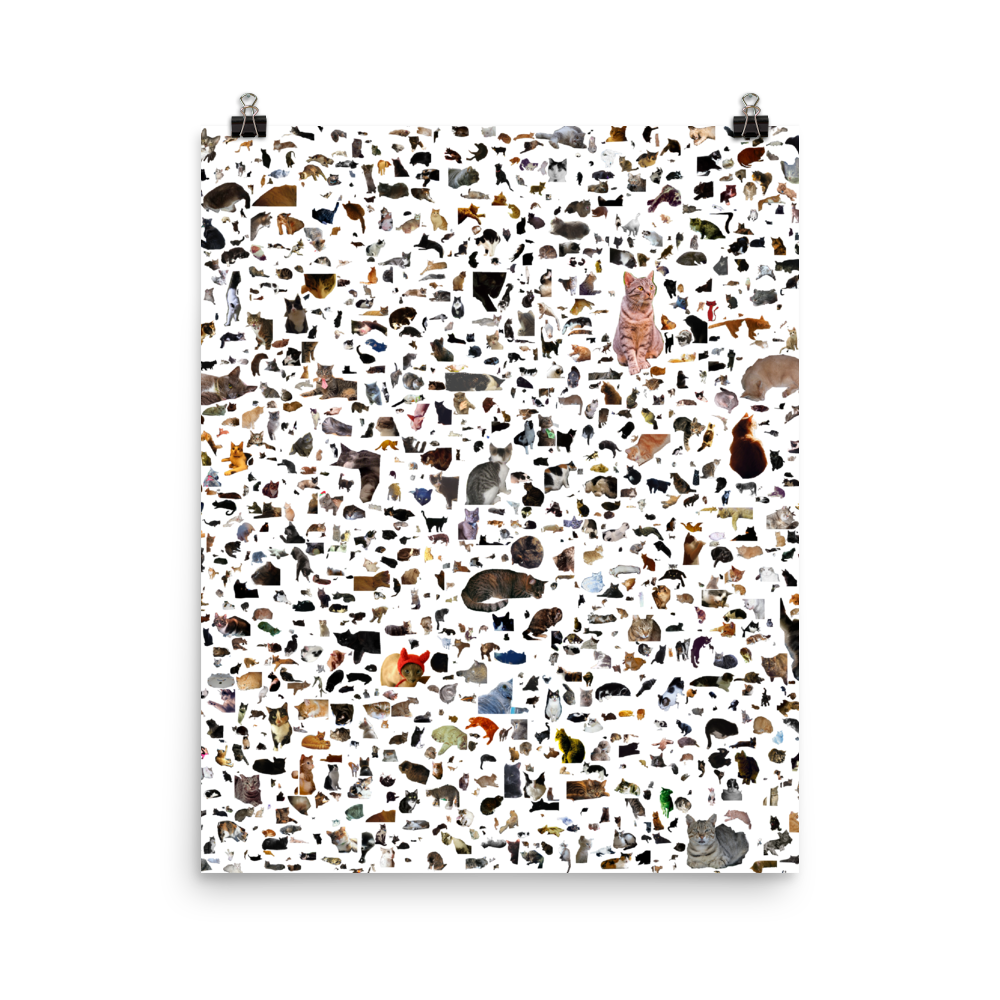

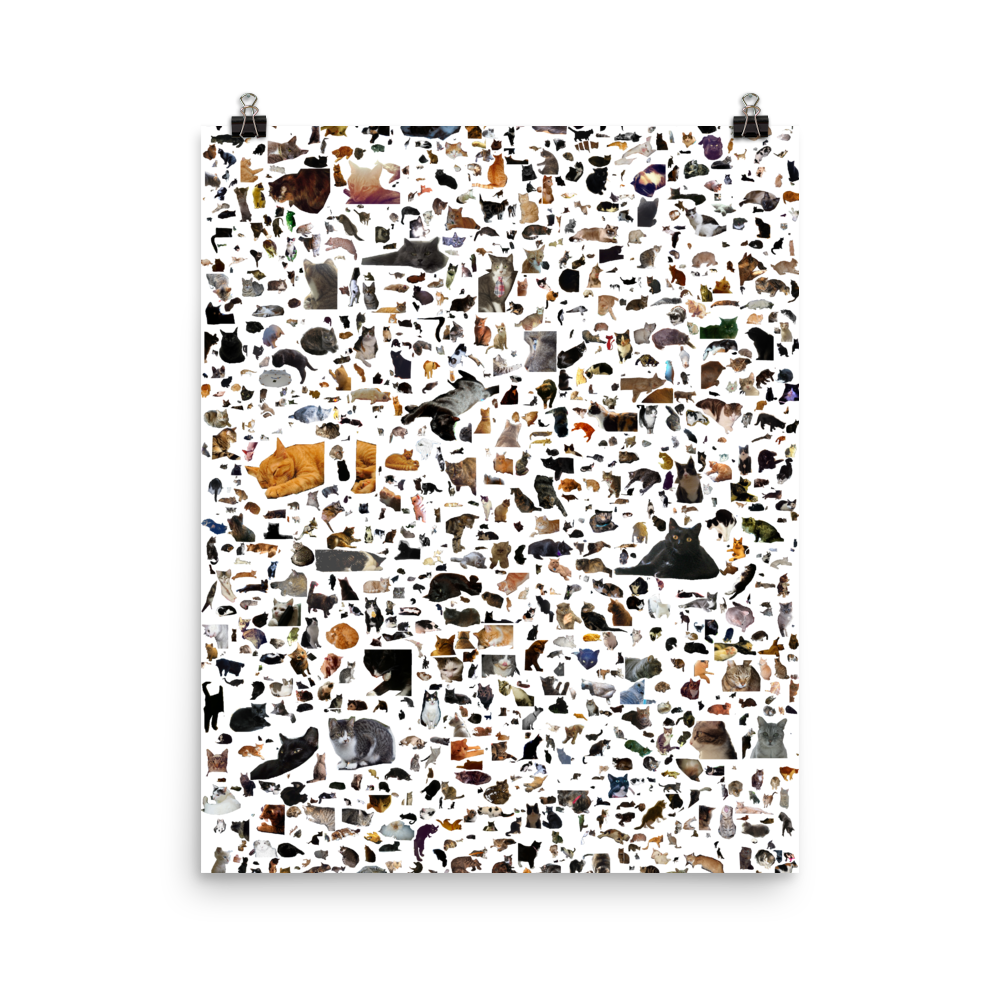

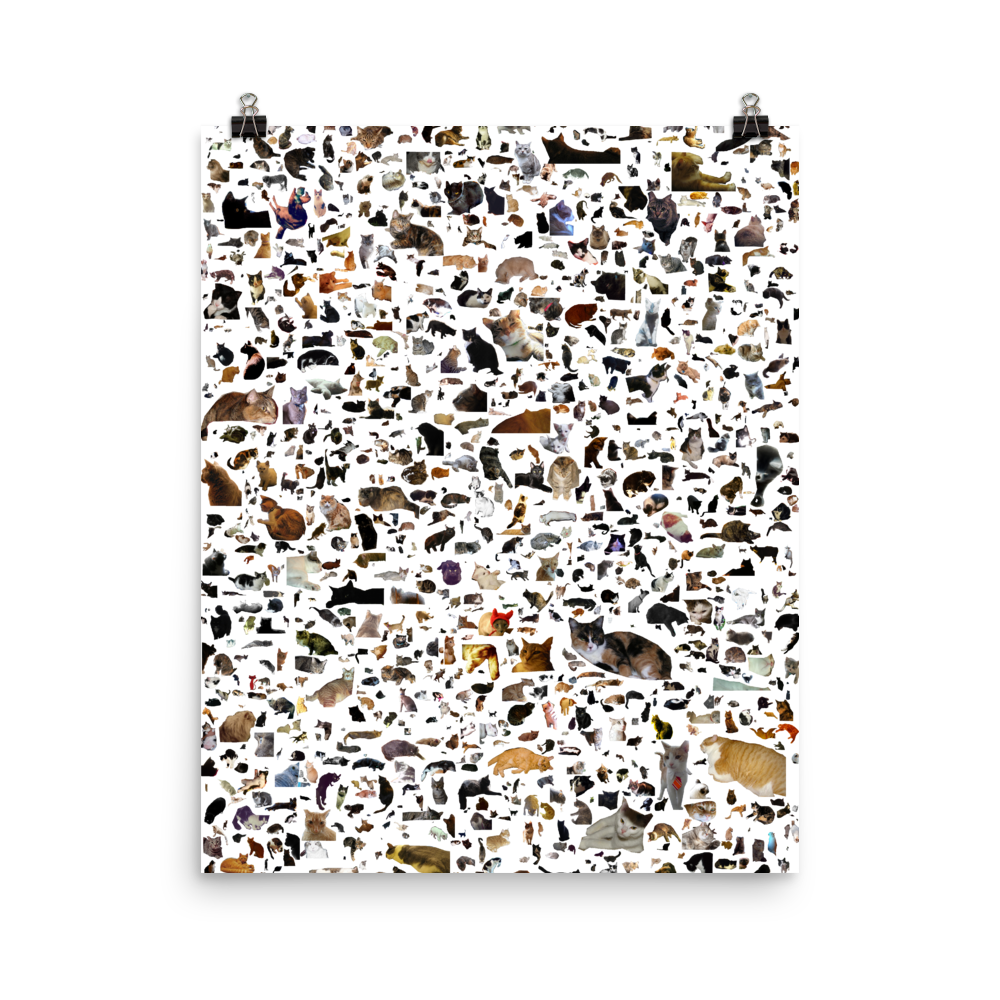

So, intrigued by what the stuff inside COCO looks like, I ran an instance segmentation model (Mask R-CNN) over it to extract thousands of items of each category:
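The extraction step can be sketched roughly like this. It is not the code used for the images below: it assumes a hypothetical `Detection` record (real Mask R-CNN implementations return boxes, class ids, scores and masks in their own formats), uses only the bounding boxes instead of cutting along the segmentation masks, and the 0.7 score threshold is an arbitrary choice.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Detection:
    # Hypothetical record for one detected object (not any library's API).
    image_id: int
    label: str
    score: float
    box: tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def clamp_box(box, width, height):
    """Snap a float box to whole pixels and keep it inside the image."""
    x1, y1, x2, y2 = box
    return (
        max(0, math.floor(x1)),
        max(0, math.floor(y1)),
        min(width, math.ceil(x2)),
        min(height, math.ceil(y2)),
    )


def group_crops(detections, image_sizes, min_score=0.7):
    """Group confident detections by label: {label: [(image_id, box), ...]}."""
    crops = defaultdict(list)
    for det in detections:
        if det.score < min_score:
            continue  # skip low-confidence detections
        width, height = image_sizes[det.image_id]
        x1, y1, x2, y2 = clamp_box(det.box, width, height)
        if x2 <= x1 or y2 <= y1:
            continue  # box lies entirely outside the image
        crops[det.label].append((det.image_id, (x1, y1, x2, y2)))
    return dict(crops)
```

Each `(x1, y1, x2, y2)` tuple can then be passed to Pillow's `Image.crop`, which takes a `(left, upper, right, lower)` box, to save one small image per object, sorted into one folder per label: A for Airplane, B for Banana, and so on.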

The images found are weirdly beautiful...

A is for Airplane ✈️

B is for Banana 🍌

C is for Cat 🐈

D is for Donut 🍩

...

and of course, P is for Pizza 🍕

⚠️ People make datasets -> machines learn from datasets -> machines make decisions based on what they learned -> decisions affect people.

Build your datasets,

defend diversity in datasets,

fight for non-biased datasets,

there's always a human who should be held accountable for machine errors.

Remember when there's an issue at the bank/store/insurance company and some staff member tells you "oh, it's a computer error, I can't do anything about it"… well, that's a cheap excuse. Computer errors rarely exist; they are mostly human errors, made by the human who programmed the thing, the human who installed it, or the human who operates it.

And... bad news: things are about to get worse with machine learning and artificial intelligence. As more decisions are delegated to seemingly inscrutable algorithms, you or your actions can easily be mislabelled, and then it might be very hard to prove the machine wrong, because we know how to program AI, but we don't really know how or why decisions are made within it. 🤷

👉 Get a nice C is for CAT 🐈 poster here ->