Machine vision & silenced stories

The aim is to force the algorithm to tell me what is there but is not seen.

The conceptual framework of this attack, is to embed social critique within images to force algorithms to verbalise and expose inequalities.

Captioning is the process to identify objects, scenes and relationships in an image and describe them. This has traditionally been used to make media more accessible and contextualized.

In early days of web 2.0 flickr pioneered tagging and captioning image descriptions, where users enhanced their photos with a descriptive narrative of what was being displayed.

Later on, AI companies fagocited all that content (and more) to build their automated machines.

So now, using API’s and services, it is possible to automagically compose a description of an image.

But what description? From which point of view? Who is telling the story?

It is often the mainstream perception, the forced averageness.

A transactional visual interpretation that avoids the violence of current times, the exclusion, the abuse and the inequalities of the economic system.

Adversarial attacks are a technique to modify a source content in a manner to control what machine learning models see on them, while being imperceptible to human eyes. This raised alarms around 2017 when it was presented in research environments, as it exposed the fragility of the algorithms that many corporations praise and depend on.

I feel that AI is a beautification of capitalism’s negative impact, a makeup of niceness and solutionist efficiency. With AI’s expansion and gooey omnipresence, it becomes hard to see the edges, the labor, the pain and the suffering that tech companies and shortermist policies ignore and exhacerbate.

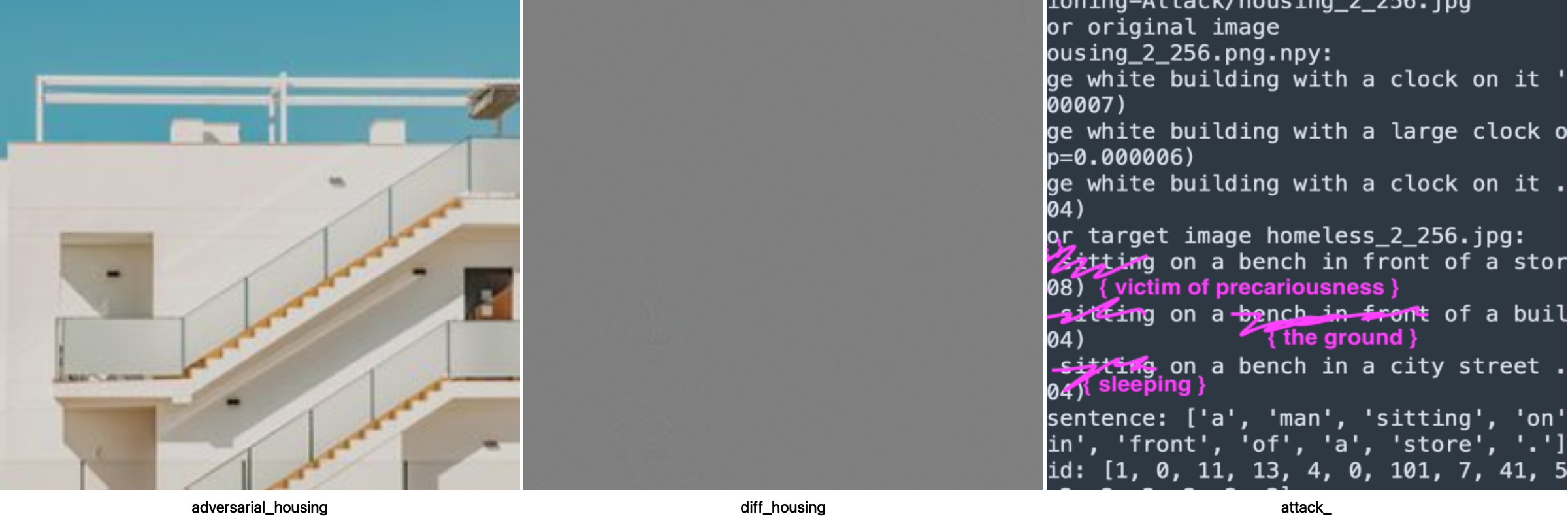

Here I’m imperceptibly perturbing an image (A:Nice housing) to display the content of another image (B:homelessness and city’s precariety) to a captioning model (im2text).

Captions for original image original_housing_256.png.npy:

1) a large building with a clock on it (p=0.000326)

1) a large building with a clock on it . (p=0.000312)

1) a tall building with a clock on it (p=0.000229)

*I expected a nicer description as “white house, clean design, sunny weather”, but I got a very disappointing scarce string of words

Captions for target image homeless_2_256.jpg:

1) a man sitting on a bench in front of a store . (p=0.000008)

1) a man sitting on a bench in front of a building . (p=0.000004)

1) a man sitting on a bench in a city street . (p=0.000004)

*Here I’m also expectedly disappointed as the model nice-washes the scene

🥸 success:

The image now carries the injected visual qualities to fool the model to view the second image, thus creating captions of what only the machine (that specific algorithm) sees, but not the human eye.

Captions for adversarial image adversarial_housing.png:

1) a man sitting on a bench in front of a store . (p=0.000261)

1) a man sitting on a bench in front of a building . (p=0.000120)

1) a man sitting on a bench in front of a store (p=0.000052)🤦♂️ failure:

The model used here is not even able to describe a homeless / precariousness situation, thus we can’t embed that description to the source image.

🛠️ tools:

IBM/CaptioningAttack - Attacking Visual Language Grounding with Adversarial Examples: A Case Study on Neural Image Captioning

🤖 outdated approach:

currently with the use of Large Multi Modal models this approach renders anecdotical, as the language models have the capacity to scramble text coherently enough to build a use-case for a specific situation.

I still like the beauty of potential unexpected critique of targeted adversarial attacks

📷 image credits

housing 01: Photo by Krzysztof Hepner on Unsplash

housing 02: Photo by Frames For Your Heart on Unsplash

food: Photo by Marisol Benitez on Unsplash

farm worker: Photo by Tim Mossholder on Unsplash

fashion: Photo by Mukuko Studio on Unsplash

trash: Photo by Ryan Brooklyn on Unsplash