Results:

Browse / Download 150 textures [google drive]

Here is a curated selection of especially beautiful textures and normal maps.

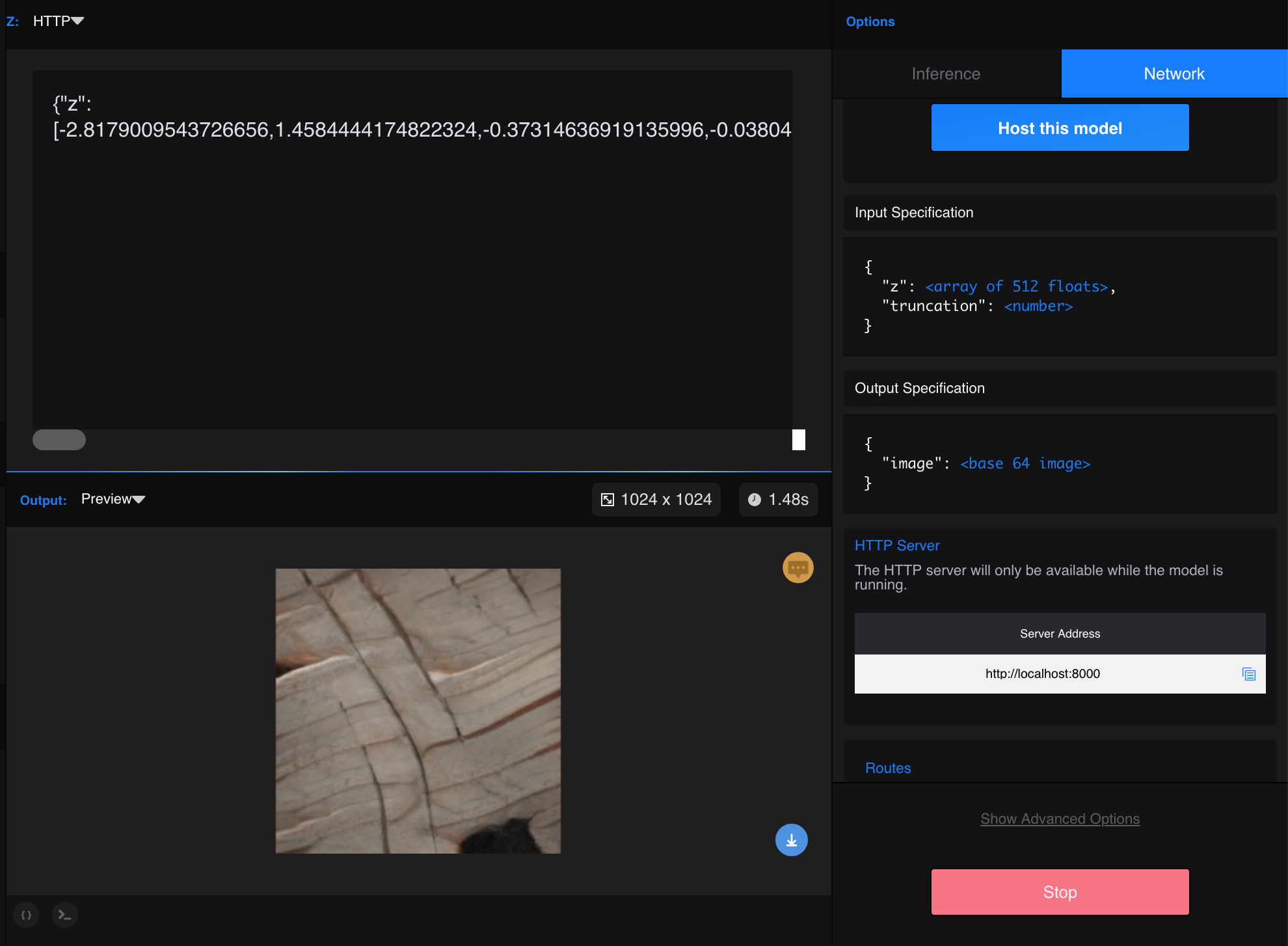

Play / Models released at Runway

Motivation:

Having used CAD & 3D software packages extensively as part of my practice as a product designer, I personally find the task of creating new materials tedious and predictable, without a surprise factor. On the other hand, playing with GANs and image-based ML tools has a discovery factor that keeps me engaged, with the reward of nice findings while exploring the unknown.

So, how could we create new 3D materials using GANs?

Proof of concept:

In order to test the look & feel of ML-generated 3D materials, I ran a collection of 1000 images of Portuguese Azulejo tiles through StyleGAN to generate visually similar tiles. The results are surprisingly nice, so this seems to be a good direction.

The next step was to add depth to those generated images.

3D packages can read black-and-white data and render it as a height transformation (bump map). If this is done with the same image used as the texture, the results are OK but not accurate, as different colours with similar brightness will show with the same height :(.
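A minimal sketch of that ambiguity, assuming the grayscale conversion uses the common Rec. 601 luma weights (a given 3D package may convert differently). Two clearly different colours end up with the same gray value, so a bump map built this way would give them the same height:

```python
def luma(r, g, b):
    """Approximate brightness of an 8-bit RGB colour (Rec. 601 weights)."""
    return 0.299 * r + 0.587 * g + 0.114 * b

# Two visually different colours, chosen for illustration.
red = (255, 0, 0)
green = (0, 130, 0)

# A bump map built from the grayscale image uses these values as height.
height_red = round(luma(*red))
height_green = round(luma(*green))

print(height_red, height_green)  # prints: 76 76
```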

So, I had to find a translation tool to identify/segment which parts of the tiles are embossed or extruded, in order to add some height detail.

To add depth to those tiles, I ran some of them through the DenseDepth model and got some interpretations of depth as grayscale images. They are not accurate, probably because the model is trained on spatial scenes (indoor and outdoor), but the results were beautiful and interesting.

Approach

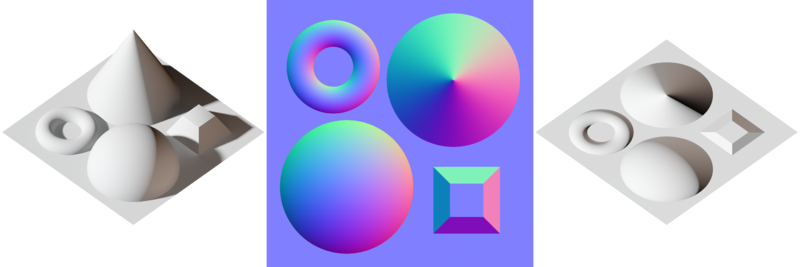

The initial idea is to source a dataset composed of texture images (diffuse) and their corresponding normal maps. Normal mapping is a technique used for faking the lighting of bumps and dents; normal maps are commonly stored as regular RGB images where the RGB components correspond to the X, Y, and Z coordinates, respectively, of the surface normal.

And then run those images through StyleGAN.
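To make the RGB-to-XYZ correspondence concrete, here is a small sketch assuming the usual 8-bit convention, where each channel value 0..255 is mapped linearly to a component in -1..1. The function names are illustrative and not taken from any particular library:

```python
import math

def decode_normal(r, g, b):
    """Map an 8-bit RGB pixel to a unit-length normal (x, y, z)."""
    x, y, z = (c / 255.0 * 2.0 - 1.0 for c in (r, g, b))
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)

def encode_normal(x, y, z):
    """Map a unit-length normal back to an 8-bit RGB pixel."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in (x, y, z))

# A normal pointing straight out of the surface.
flat_rgb = encode_normal(0.0, 0.0, 1.0)
flat_normal = decode_normal(*flat_rgb)
print(flat_rgb)
```

A flat surface encodes to (128, 128, 255), which is why normal maps look predominantly light blue.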

It would be ideal to have two StyleGANs talk to each other while learning, so they'd know which normal map relates to which texture... But doing that is beyond my current knowledge.

A workaround could be to stitch/merge both diffuse and normal textures into a single image and run it through StyleGAN, in the hope that it would understand that each image has two different, corresponding sides and thus generate images with both sides, which could later be split to get the generated diffuse and the corresponding generated normal.

But first, I need to see if the model is good at generating diffuse texture images from a highly diverse dataset containing bricks, fur, wood, foam... (probably yes, as it did with the Azulejo, but it needs to be tested).
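The stitch-and-split idea can be sketched without any image library by treating an image as a list of pixel rows. This is only an illustration on toy data; a real pipeline would do the same with an image library, and the left/right layout is an assumption:

```python
def stitch(diffuse, normal):
    """Place two equally sized images side by side (diffuse left, normal right)."""
    if len(diffuse) != len(normal):
        raise ValueError("images must have the same height")
    return [d_row + n_row for d_row, n_row in zip(diffuse, normal)]

def split(stitched):
    """Cut a stitched image down the middle into (diffuse, normal)."""
    half = len(stitched[0]) // 2
    left = [row[:half] for row in stitched]
    right = [row[half:] for row in stitched]
    return left, right

# 2x2 toy images; each pixel is an (R, G, B) tuple.
diffuse = [[(200, 120, 40), (190, 110, 35)],
           [(180, 100, 30), (170, 90, 25)]]
normal = [[(128, 128, 255), (140, 128, 250)],
          [(128, 140, 250), (128, 128, 255)]]

pair = stitch(diffuse, normal)           # training image, twice as wide
out_diffuse, out_normal = split(pair)    # applied to generated images later
```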

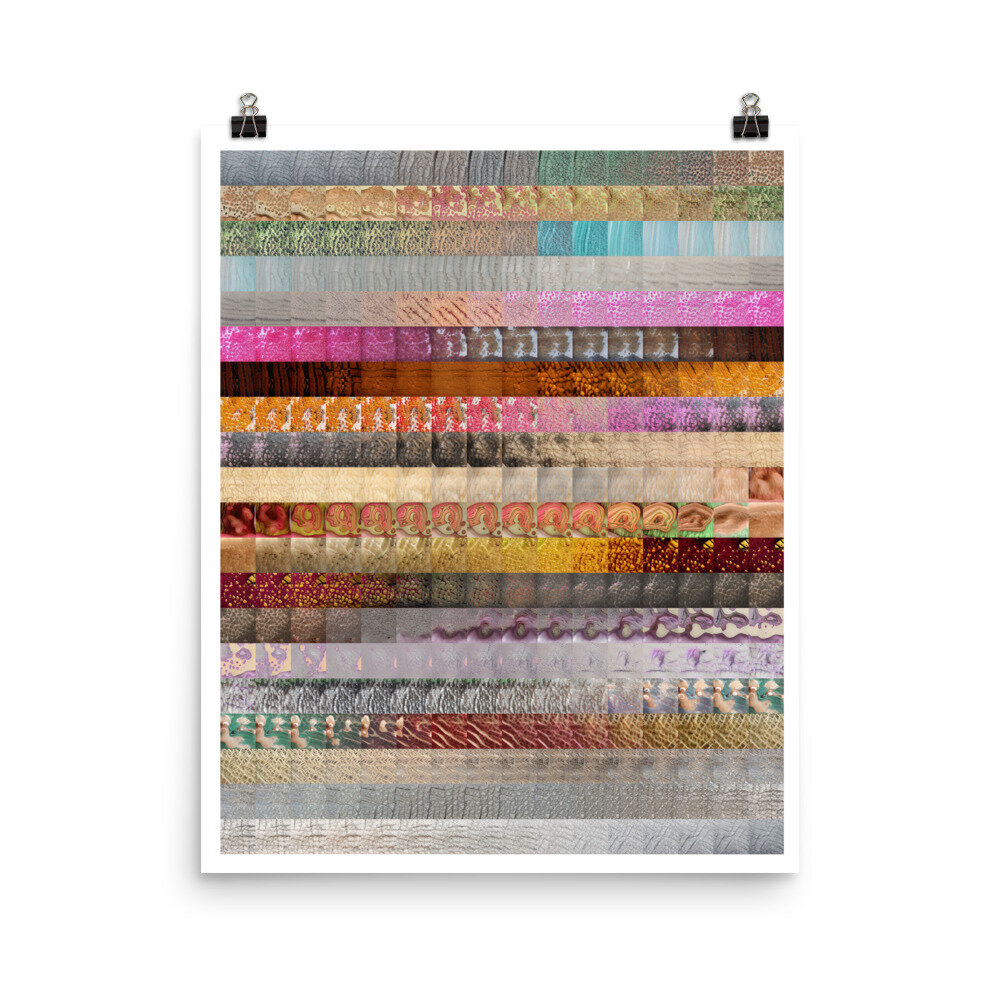

Diffuse Texture generation

The dataset chosen is the Describable Textures Dataset (DTD), a texture database consisting of 5640 images organized according to a list of 47 terms (categories) inspired by human perception. There are 120 images for each category.

The results are surprisingly consistent and rich, considering that some categories have confusing textures that are not “full-frame” but appear on objects or in context, e.g. “freckles”, “hair” or “foam”.

Describable Textures Dataset (DTD)

Normal Map generation

Finding a good dataset of normal maps has been challenging, since there's an abundance of textures for sale but a scarcity of freely accessible ones. Initially I attempted to scrape some sites containing free textures, but it required too much effort to collect a few hundred textures.

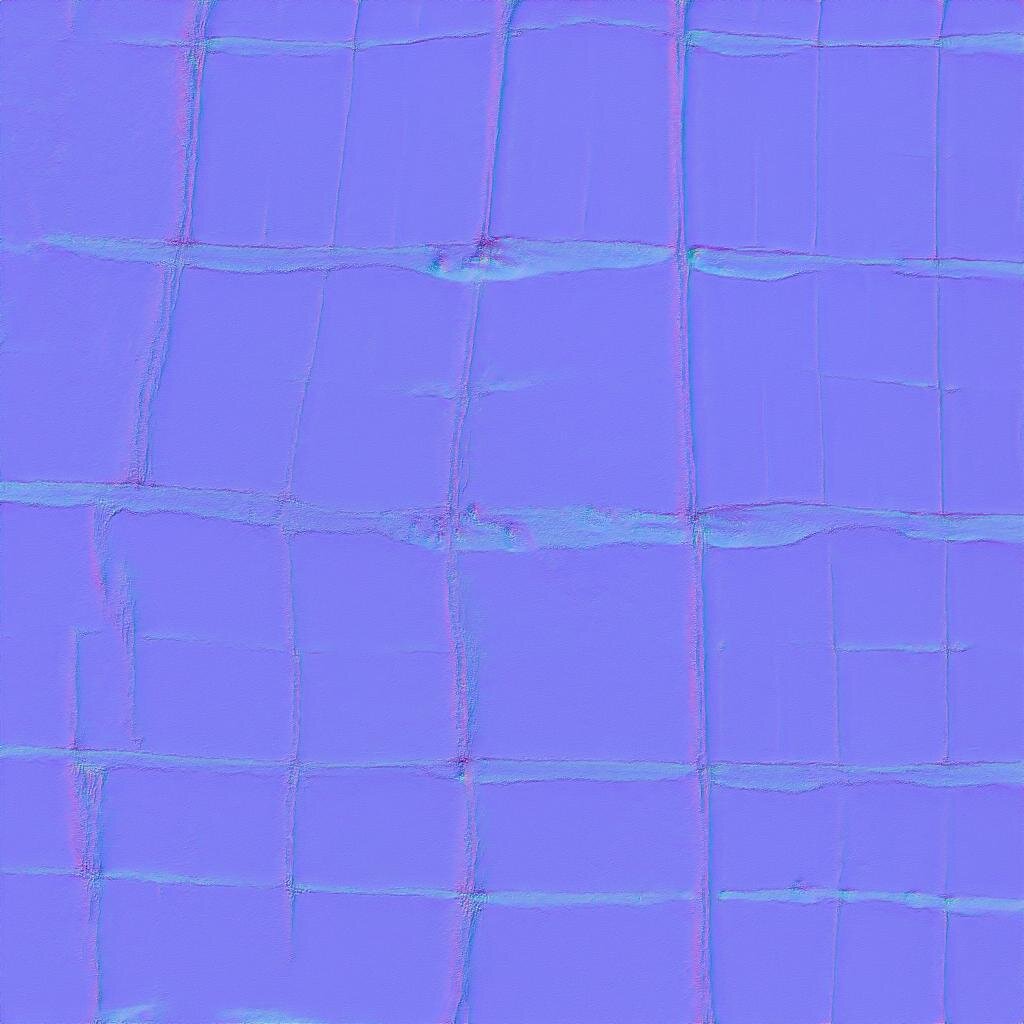

A first test was done with the dataset provided by the Single-Image SVBRDF Capture with a Rendering-Aware Deep Network project.

From this [85GB zipped] dataset I cropped and isolated a subset of 2700 normal map images to feed the StyleGAN training.

Dataset: Normal Maps

StyleGAN was able to understand what makes a normal map, and it generates consistent images, with the colors correctly placed.

I then ran the generated normal maps through Blender to see how they drive light within a 3D environment.
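As a rough illustration of how a normal map drives light, the sketch below computes simple Lambertian (diffuse) shading for single normal-map pixels. It is a simplified stand-in for what a renderer does, not Blender's actual shading model, and the light direction and pixel values are made up for the example:

```python
import math

def shade(normal_rgb, light_dir):
    """Lambertian intensity (0..1) for one normal-map pixel and a light direction."""
    n = [c / 255.0 * 2.0 - 1.0 for c in normal_rgb]
    n_len = math.sqrt(sum(c * c for c in n))
    l_len = math.sqrt(sum(c * c for c in light_dir))
    dot = sum(a * b for a, b in zip(n, light_dir)) / (n_len * l_len)
    return max(0.0, dot)  # surfaces facing away from the light get no light

# Hypothetical light coming from the +X side, above the surface.
light = (1.0, 0.0, 1.0)

flat = shade((128, 128, 255), light)            # untouched surface
tilted_towards = shade((218, 128, 218), light)  # slope facing the light
tilted_away = shade((38, 128, 218), light)      # slope facing away

print(tilted_towards > flat > tilted_away)
```

Pixels whose encoded normal leans towards the light come out brighter than flat ones, which is what produces the impression of bumps and dents on a flat mesh.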

Diffuse + Normal Map single image StyleGAN training.

Now I knew that StyleGAN was capable of generating nice textures and nice normal maps independently, but I needed the normal maps to correlate with the diffuse information. Since I wasn’t able to make two StyleGANs talk to each other while training, I opted for composing a dataset containing the diffuse and the normal map within a single image.

This time I chose cc0textures, which has a good repository of PBR textures and provides a CSV file that can be used to download the desired files via wget.
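A sketch of how such a CSV could be filtered into a download list. The column names (`asset_id`, `variant`, `url`), the variant label and the example.com URLs are all hypothetical; the real file's layout may differ and should be checked first:

```python
import csv
import io

# Hypothetical CSV excerpt; the real file's columns and values may differ.
SAMPLE_CSV = """asset_id,variant,url
Bricks001,1K-JPG,https://example.com/Bricks001_1K-JPG.zip
Bricks001,4K-PNG,https://example.com/Bricks001_4K-PNG.zip
Wood004,1K-JPG,https://example.com/Wood004_1K-JPG.zip
Wood004,4K-PNG,https://example.com/Wood004_4K-PNG.zip
"""

def select_urls(csv_text, wanted_variant="1K-JPG"):
    """Return the URLs of the rows whose variant matches the wanted one."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row["url"] for row in reader if row["variant"] == wanted_variant]

urls = select_urls(SAMPLE_CSV)

# One URL per line; saved to a file, this list can be passed to `wget -i`.
url_list = "\n".join(urls)
print(url_list)
```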

Dataset: 790 images

The generated images are beautiful and they work as expected.

StyleGAN is able to understand that both sides of the images are very different and respects that when generating content.

It works! The normal maps look nice and correspond to the diffuse features.

What if?…

The diffuse images generated with the image pairs are a bit weak and uninteresting, because the source dataset isn’t as bright and varied as the DTD. But unfortunately the DTD doesn’t have associated normal maps. :(

I want a rich diffuse texture and a corresponding normal map… 🤔

Normal map generation from source diffuse texture

I had to find a way to turn any given image into a somewhat working normal map. To do this, I tested Pix2Pix (Image-to-Image Translation with Conditional Adversarial Nets) with the image pairs composed for the previous experiment.

I trained it locally with 790 images, and after 32h of training I had some nice results:

Pix2Pix generates consistent normal maps of any given texture.

Chaining models

Workflow idea:

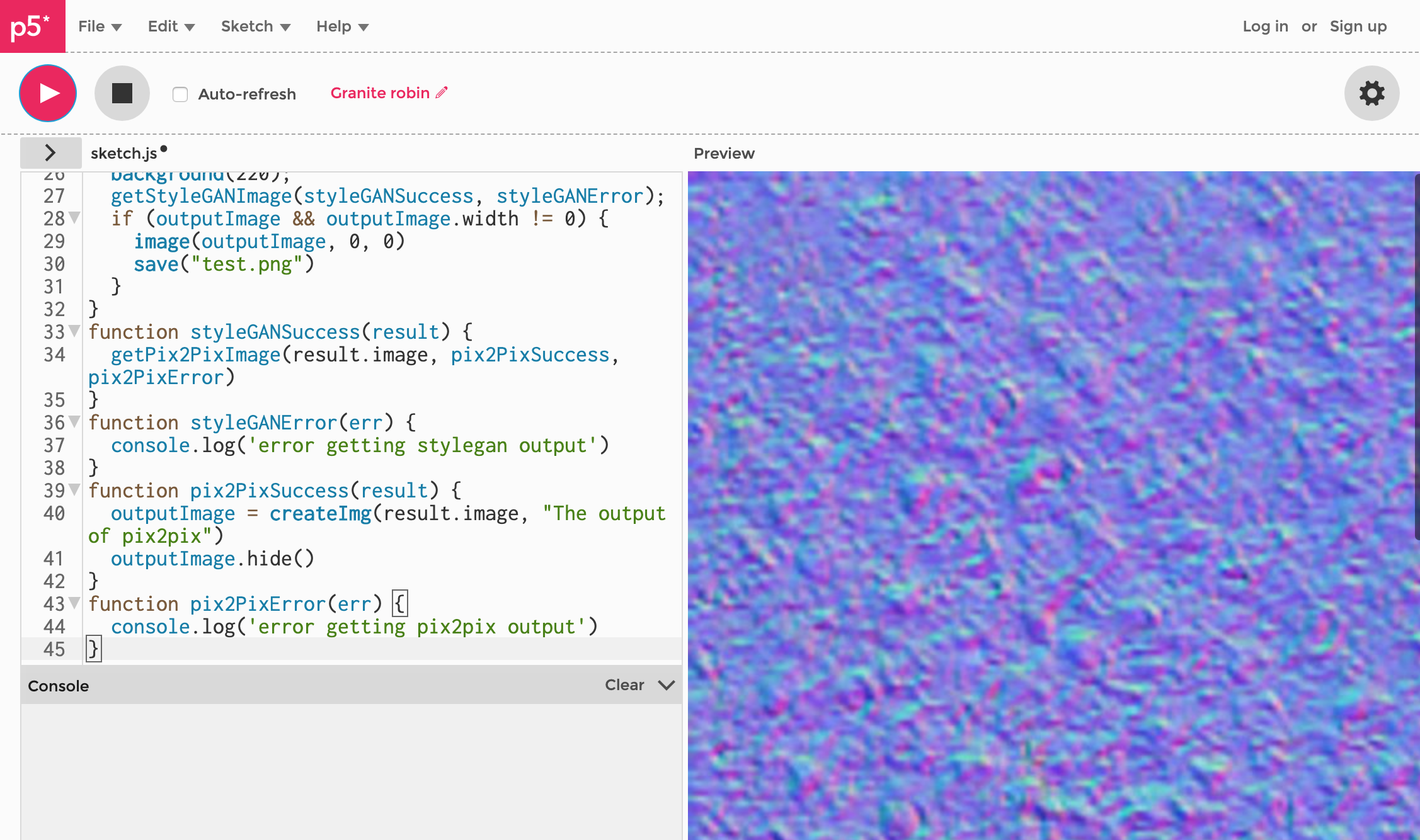

Diffuse from StyleGAN -> input to Pix2Pix -> generate the corresponding normal map.

First I had to bring the trained Pix2Pix model to Runway [following these steps].

Then I used StyleGAN’s output as Pix2Pix Input. 👍

With the help of Brannon Dorsey and following this fantastic tutorial from Daniel Shiffman, we were able to run a p5.js sketch that generates randomGaussian vectors for StyleGAN and uses its output to feed Pix2Pix and generate the normal maps.
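The chaining logic can be sketched in a few lines, independent of how the models are hosted. This is not the p5.js sketch mentioned above: the latent size of 512 is an assumption to check against the model in use, and the two `fake_*` functions are placeholders standing in for the real StyleGAN and Pix2Pix calls:

```python
import random

LATENT_SIZE = 512  # assumed latent size; check the model you are using

def random_latent(size=LATENT_SIZE, seed=None):
    """Sample a latent vector from a standard normal distribution."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

def generate_material(z, generate_diffuse, diffuse_to_normal):
    """Chain the two models: latent -> diffuse texture -> normal map."""
    diffuse = generate_diffuse(z)
    normal = diffuse_to_normal(diffuse)
    return diffuse, normal

# Placeholder models so the sketch runs without any ML backend.
def fake_stylegan(z):
    return {"kind": "diffuse", "latent_size": len(z)}

def fake_pix2pix(image):
    return {"kind": "normal", "source": image["kind"]}

z = random_latent(seed=42)
diffuse, normal = generate_material(z, fake_stylegan, fake_pix2pix)
print(diffuse["kind"], "->", normal["kind"])
```

Passing the models in as functions keeps the chain the same whether they run locally or behind an HTTP endpoint.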

Final tests / model selection

From all the tests, the learnings are the following:

StyleGAN for textures works well - [SELECTED] - dataset = 5400 images

StyleGAN for normal maps works well, but there’s no way to link the generated maps to diffuse textures - [DISCARDED]

StyleGAN trained with a 2-in-one dataset produces nice and consistent results - but the datasets used did not produce varied and rich textures. [DISCARDED]

Pix2Pix to generate normal maps from a given diffuse texture works well. [SELECTED] - dataset = 790 image pairs

Pix2Pix trained with 10000 images produced weak results. [DISCARDED]

The final approach is: StyleGAN trained with textures from the DTD dataset (5400 images) -> Pix2Pix trained with a small (790) dataset of Diffuse-Normal pairs from cc0textures

Visualization & Interaction

I really like how Runway visualises the latent space using a 2D grid to explore a multi-dimensional space of possibilities (in resonance with some of the ideas in this article: Rethinking Design Tools in the Age of Machine Learning).
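One simple way to lay a high-dimensional latent space out on a 2D grid is to blend four corner vectors bilinearly. This is only a sketch of the general idea; how Runway actually builds its grid is not described here, and the two-dimensional toy vectors stand in for real latent vectors:

```python
def lerp(a, b, t):
    """Linear interpolation between two vectors of equal length."""
    return [x + (y - x) * t for x, y in zip(a, b)]

def latent_grid(top_left, top_right, bottom_left, bottom_right, rows, cols):
    """Bilinear blend of four corner vectors into a rows x cols grid (both >= 2)."""
    grid = []
    for r in range(rows):
        v = r / (rows - 1)
        left = lerp(top_left, bottom_left, v)
        right = lerp(top_right, bottom_right, v)
        grid.append([lerp(left, right, c / (cols - 1)) for c in range(cols)])
    return grid

# Toy 2-dimensional "latent vectors" for the four corners.
corners = ([0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0])
grid = latent_grid(*corners, rows=3, cols=3)

print(grid[1][1])  # the centre cell is an equal mix of all four corners
```

Each cell's vector would then be sent to the generator, so neighbouring cells show gradually changing textures.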

It would be great to build a similar approach to explore the generated textures within a 3D environment.

To visualise textured 3D models on the web, I explored Three.js and other tools.

Babylon.js seems the best suited for this project:

A quick test with the textures generated from StyleGAN looks nice enough.

Trying to add the grid effect found in Runway makes the thing a bit slower but interesting. The challenge is how to swap the textures on the fly with the ones generated by StyleGAN/Pix2Pix…

After some tests and experiments I managed to load video textures and display them within a realtime web interface. 👍

mouse wheel / pinch = zoom

click/tap + drag / arrow keys = rotate

*doesn't seem to work with Safari desktop

Learnings & Release

StyleGAN behaved as expected: it produces more interesting results when given a richer and more varied dataset.

The Pix2Pix model has proven strong enough, but the results could be better. For the next iterations of this idea I should explore other models, like MUNIT or even some style-transfer approach.

Both checkpoints have been released and are free to use at Runway. StyleGAN-Textures + Diffuse-2-Normal

You can also download the model files here for your local use.

Thanks & next

This project was done in about two weeks, within the Something-in-Residence program at RunwayML. Thanks.

I’ve learned a lot during this project and I’m ready to build more tools and experiments blending Design/AI/3D/Crafts and help others play with ML.

For questions, comments and collaborations, please let me know -> contact here or via twitter.

Background & Glossary

Some impressive work has been previously done in that area:

Some prints: