A camera lens for Snapchat that you point at a drawing to colorise it using machine learning.

detailed video ->

the same, 90% longer

Project developed as part of the AR Creator Residency Program at Snapchat, a global initiative designed for artists and developers to explore new ways to bring their work to life with augmented reality.

Built with the Lens Studio software and its Machine Learning component.

Behind the Scenes

Initial thought: develop a lens to colorise drawings.

(without having any idea how to do it 😅)

I had previously seen some web-based applications that use machine learning to do that, so at first I assumed it wouldn’t be hard to make it run on the camera as a lens.

AI-Powered Automatic Colorization / https://petalica-paint.pixiv.dev/index_en.html

Initial research pointed me to the Styles2Color repository, which does exactly that in great detail. Unfortunately, I was not able to make it run, so I had to look for an alternative.

I chose Pix2Pix because it is well documented and seemed like a good solution for the task. I set it up on my local machine using this repository by Jun-Yan Zhu and started testing.

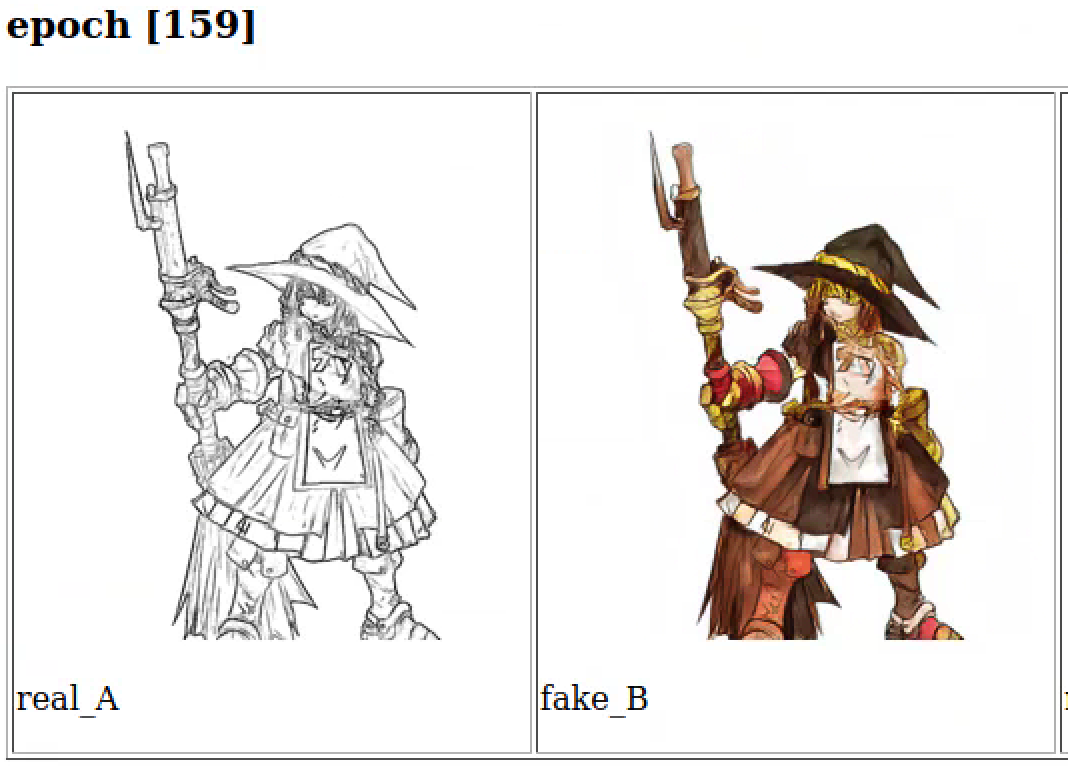

First I had to find a suitable dataset of image pairs, linework -> color, so Pix2Pix could learn how colorized images should look based on a source line drawing.
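As a rough illustration of what such a pair looks like in practice: paired Pix2Pix datasets are often stored as a single image per pair, with the two halves side by side. A minimal sketch of splitting one, assuming a plain nested-list image and assuming the line drawing sits in the right half (the function name and the layout are hypothetical, so check the dataset's actual layout):

```python
def split_pair(image, sketch_on_right=True):
    """Split a side-by-side pair image into (sketch, color) halves.

    `image` is a list of rows, each row a list of pixels. Which half
    holds the sketch is an assumption, not a fact about the dataset.
    """
    width = len(image[0])
    if width % 2 != 0:
        raise ValueError("expected an even width for a side-by-side pair")
    half = width // 2
    left = [row[:half] for row in image]
    right = [row[half:] for row in image]
    return (right, left) if sketch_on_right else (left, right)


# Tiny 2x4 example: "C" marks colour pixels, "S" marks sketch pixels.
pair = [["C", "C", "S", "S"],
        ["C", "C", "S", "S"]]
sketch, color = split_pair(pair)
```

Pix2Pix then learns the mapping from the sketch half to the color half.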

I found one on Kaggle: Anime Sketch Colorization Pair, a dataset of 14k anime drawings (quite NSFW).

I trained Pix2Pix for up to 200 epochs, and the results were convincing.

But I hit a roadblock when I saw that the generated model file was over 200 MB, far too large to fit inside a mobile lens :(
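A back-of-the-envelope sketch of why such a file gets so large: each float32 weight takes 4 bytes, and a convolution layer holds kernel × kernel × in × out weights plus one bias per output channel. The layer widths below are hypothetical, chosen only to show how strongly channel width drives file size. They are not the real Pix2Pix architecture.

```python
BYTES_PER_FLOAT32 = 4


def conv_params(in_ch, out_ch, kernel=4):
    """Weights plus biases of one 2D convolution layer."""
    return kernel * kernel * in_ch * out_ch + out_ch


def model_size_mb(layers):
    """Approximate float32 size in megabytes for a list of (in, out) layers."""
    total = sum(conv_params(i, o) for i, o in layers)
    return total * BYTES_PER_FLOAT32 / 1_000_000


# Hypothetical layer widths for illustration only.
wide = [(3, 64), (64, 128), (128, 256), (256, 512), (512, 512)]
narrow = [(3, 16), (16, 32), (32, 64), (64, 128), (128, 128)]

print(f"wide:   {model_size_mb(wide):.2f} MB")
print(f"narrow: {model_size_mb(narrow):.2f} MB")
```

Because most weights scale with in × out, quartering the channel widths cuts the size by roughly 16x. Narrowing the network is one common lever for shrinking a model, though nothing here says which changes were actually made for the lens.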

Luckily, with the support of the Snap team, I was pointed to the work of Char Stiles, who previously did a residency and developed a “Statue Lens”, also using Pix2Pix, and managed to shrink the model size to 1.1 MB.

Using her code, I trained the model again, and the results were surprisingly good.

While the model worked nicely with line drawings, it produced weird outputs when pointed at non-drawings (obviously).

So, using Edge-Detection from Lens Studio’s Post-Effects library, I could try to stylise anything into a line drawing.
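To give an idea of what an edge-detection pass does, here is a minimal sketch using a Sobel filter on a small grayscale grid. This is only a stand-in: the algorithm behind the Lens Studio effect is not stated here, and the function name and threshold are assumptions.

```python
def sobel_edges(gray, threshold=128):
    """Return a black-on-white line image from a grayscale grid (0-255)."""
    h, w = len(gray), len(gray[0])
    out = [[255] * w for _ in range(h)]  # start from white paper
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal and vertical Sobel gradients.
            gx = (gray[y-1][x+1] + 2*gray[y][x+1] + gray[y+1][x+1]
                  - gray[y-1][x-1] - 2*gray[y][x-1] - gray[y+1][x-1])
            gy = (gray[y+1][x-1] + 2*gray[y+1][x] + gray[y+1][x+1]
                  - gray[y-1][x-1] - 2*gray[y-1][x] - gray[y-1][x+1])
            magnitude = (gx * gx + gy * gy) ** 0.5
            if magnitude > threshold:
                out[y][x] = 0  # strong edge becomes a dark line
    return out


# A dark square on a light background: its border turns into lines.
frame = [[200] * 6 for _ in range(6)]
for y in range(2, 4):
    for x in range(2, 4):
        frame[y][x] = 20
lines = sobel_edges(frame)
```

Flat regions stay white and sharp brightness changes become dark strokes, which is roughly the kind of input a model trained on line drawings expects.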

Adding the model to Lens Studio is quite straightforward when starting from a SnapML template.

I started with a StyleTransfer lens, and imported the .onnx file with its corresponding Scale and Bias settings.
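For context, scale and bias settings of this kind describe a linear mapping, value × scale + bias, between camera pixel values and the range the network was trained on. A minimal sketch, assuming 0-255 pixel values and the [-1, 1] range that Pix2Pix uses for its inputs and outputs. The function names and numbers are illustrative, not the settings used in the lens.

```python
def to_model_range(pixel, scale=2 / 255, bias=-1.0):
    """Map a 0-255 pixel value to [-1, 1] as pixel * scale + bias."""
    return pixel * scale + bias


def to_pixel_range(value, scale=127.5, bias=127.5):
    """Map a [-1, 1] model output back to 0-255 as value * scale + bias."""
    return value * scale + bias


# Round trip for black, mid-gray and white.
for p in (0, 128, 255):
    v = to_model_range(p)
    print(p, round(v, 3), round(to_pixel_range(v)))
```

If these values do not match the normalisation used during training, the output usually looks washed out or blown out rather than failing outright.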

For the user interface, I opted for something clean and monochromatic to mimic the comic/manga look & feel.

I designed a square canvas to accommodate all kinds of screen sizes and placed the 1:1 ratio camera in the center.

layout composed with indistinguishable comic fragments

Since the model behaves very differently depending on whether the source image is a drawing or a real-world image stylised with fake edges, I had to let the user change the effect applied to the camera to suit the situation. To do so, I placed two previews, grayscale and edge, so the user can pick whichever works better.

User Interaction / Interface layout

Before continuing, I ran another round of tests to try to improve the model’s behaviour.

It appeared that camera noise was making the model lose the sharpness and color richness I was getting when running it locally.

To try to solve that, I augmented the dataset 4x by adding noise, blur and grayscale versions of the original image pairs.
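A minimal sketch of this kind of 4x augmentation, in plain Python on a tiny RGB image. The function names, noise amount and blur kernel are all assumptions. The parameters actually used, and whether both halves of each pair were degraded, are not stated here.

```python
import random


def add_noise(img, amount=20, seed=0):
    """Add uniform per-channel noise, clamped to 0-255."""
    rng = random.Random(seed)
    return [[tuple(min(255, max(0, c + rng.randint(-amount, amount))) for c in px)
             for px in row] for row in img]


def box_blur(img):
    """3x3 box blur; edge pixels average over the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            near = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(tuple(sum(p[k] for p in near) // len(near) for k in range(3)))
        out.append(row)
    return out


def to_grayscale(img):
    """Replace each pixel with its channel average, kept as an RGB triple."""
    return [[(sum(px) // 3,) * 3 for px in row] for row in img]


def augment(img):
    """Return the original plus three degraded variants (4x the data)."""
    return [img, add_noise(img), box_blur(img), to_grayscale(img)]


sample = [[(255, 0, 0), (0, 255, 0)],
          [(0, 0, 255), (255, 255, 255)]]
variants = augment(sample)
```

The idea is to show the model degraded inputs during training so that camera noise at inference time is less of a surprise.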

Results did not improve dramatically, so the original model was used to build the final lens.

The final touches affected the layout of the user interface: once the lens was published, the top part of the screen became unresponsive to touch, rendering the grayscale/edges previews unusable. :(

The workaround was to move the affected elements down a bit, covering the main element, a design decision that makes the whole interface more crowded but keeps it usable.

The UI elements disappear once the user is happy with the results and taps the snap button, resulting in a clean comic-like page with the colorized drawing centered on the screen.

credits & thanks:

model: CycleGAN and pix2pix in PyTorch

code tweaked for SnapML from Char Stiles

dataset: Anime Sketch Colorization Pair / Kaggle

support from Snap team: Olha Rykhliuk - UX / Aleksei Podkin - ML

Tests & FAILS