WTFood

A lens to explore systemic socioeconomic glitches in the food system and discover ways to take action. From curiosity to activism.

🥬 curiosity -> action 🤘


You feel that something is wrong with the food system, but you still don't know what to do about it.

Take a fruit or vegetable, open the camera, and watch it morph into a glitch of the food system.

The glitch is the socioeconomic impact of certain practices, policies or market forces.

You already knew that. You feel it when you go to the supermarket and everything is shiny and beautiful, and all the packaging and advertising show you happy farmers.

Where are the precarious workers?

Where are the small stores that can't compete with large distribution chains?

Where are the industrial growers whose produce feeds cheap processed salads that will mostly end as waste?

WTFood will show you this, and will also show you links to people, companies, communities and policies that are fixing these glitches near you, so you can take action.

Use it again, and a new systemic glitch will appear.

Each time from the point of view of different people involved in the food system.

The Map

You are not alone: each tile of the map is a story triggered by someone else. Together, we collaboratively uncover a landscape of systemic glitches and solutions.

Desktop:

click-drag to pan around the map, and use your mouse-wheel to zoom in-out.

when you click on a tile, the map centers and zooms in on it.

click anywhere on the grayed-out area to navigate again

Mobile:

swipe up/down/left/right to jump to other tiles

pinch with two fingers to zoom in-out and pan

The Sorting

You can explore this map by seemingly unrelated categories.

enter a word or sentence in each box

hit SORT to see how the cards arrange accordingly

navigate the map as usual

Status

This is a "digital prototype", which means it is a tool built as a proof of concept, and as such, it has been built with some constraints:

Content

The issues of the food system have been narrowed down into the following 5 areas:

Power consolidation

Workers' rights and conditions

Food distribution and accessibility

Economic reliance and dependence

Local and cultural food variations

The perspectives have been limited to 5 stakeholders, subjectively chosen by their impact and role in the food system:

Permaculture local grower

CEA industrial grower

Supermarket chain manager

Wealthy consumer

Minimum wage citizen

Functionality

🧑💻 The system works beautifully on a computer, especially the Map and Sort

📱 Android is preferred for mobile use

⚠️ Avoid iPhones as videos do not auto-play and map navigation is sluggish and confusing.

⌛️ Video creation and link making take about 50 seconds.

👯 Multiple users might collide if a photo is sent while a video is created, so your image might be lost or appear on another tile.

🍑🍆 Non-fruit-or-vegetables photos won’t be processed

The system has two modes: Live / Archive

Live: you can use it to add photos and generate new videos and links and browse and sort existing tiles. Live mode will be enabled until the end of May 2024, and probably during some specific events only.

Archive: You can use it to browse and sort existing tiles. This will be the default status from June to December 2024

Data & Privacy

Photos you upload are used to create the video and run the service. They're stored on our servers.

Location: We use your IP address (like most websites) to show you relevant local links, but we don't sell or trade any data.

🙌 Open source code: The code is available for anyone to use and see. → github

The Digital Prototype

The technical development has been performed focusing on adaptability and easy deployment.

The software package has scripts to install all the components and required libraries.

The code has been made available as an open-source repository on GitHub

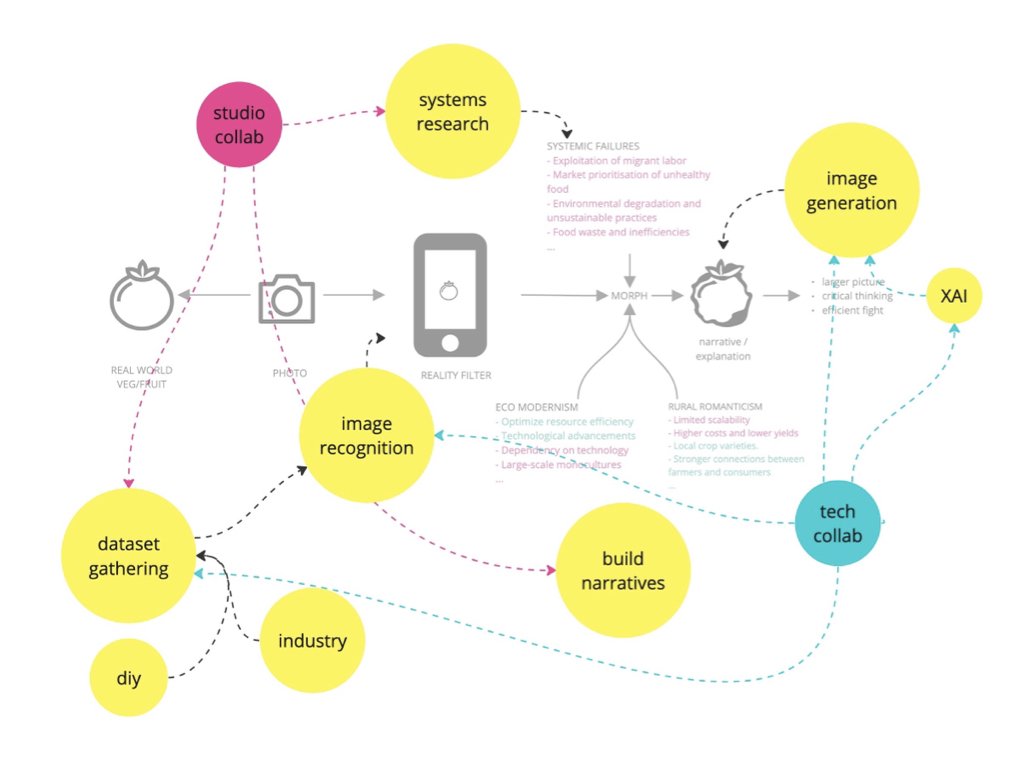

The main components of WTFood are:

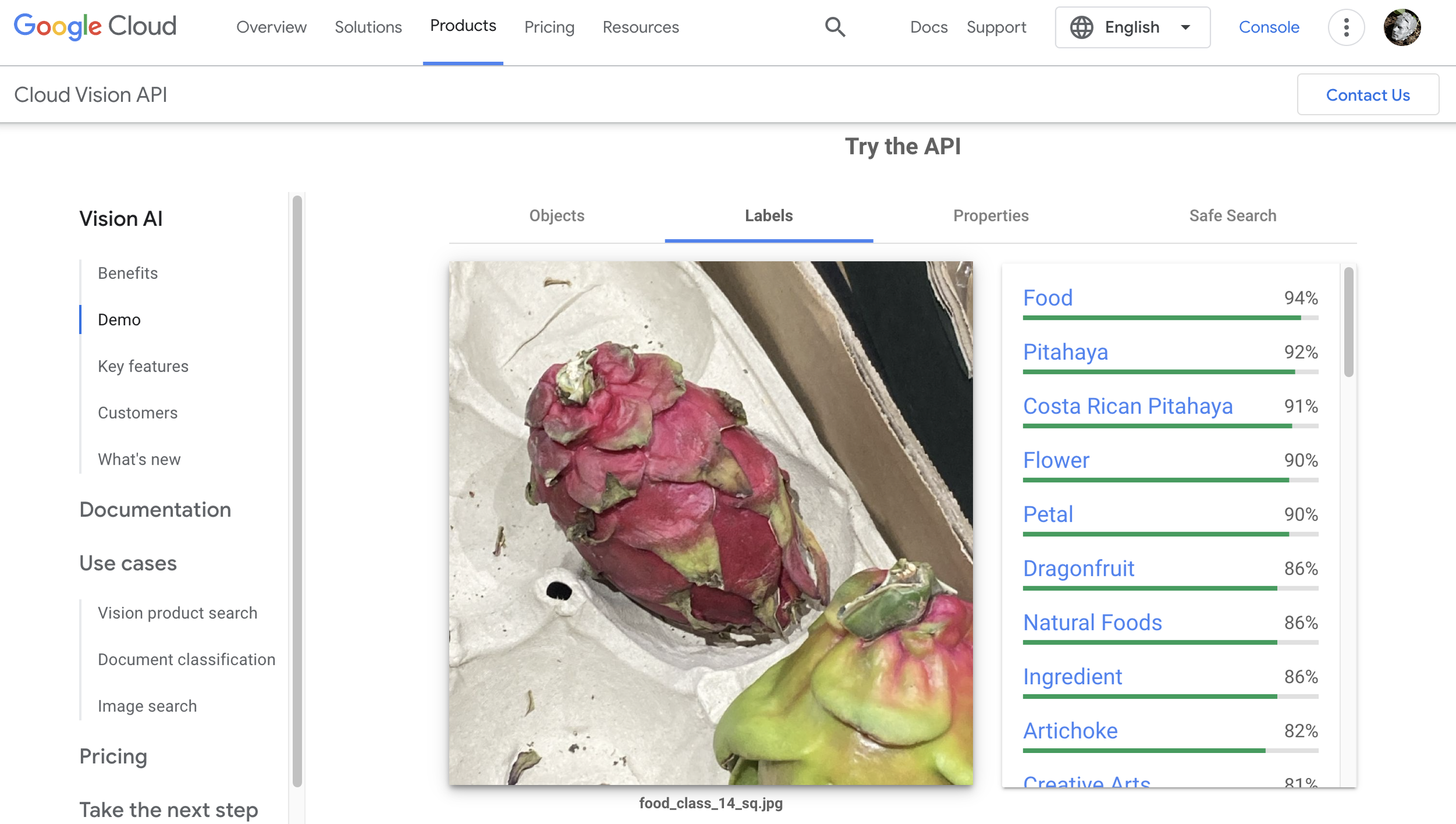

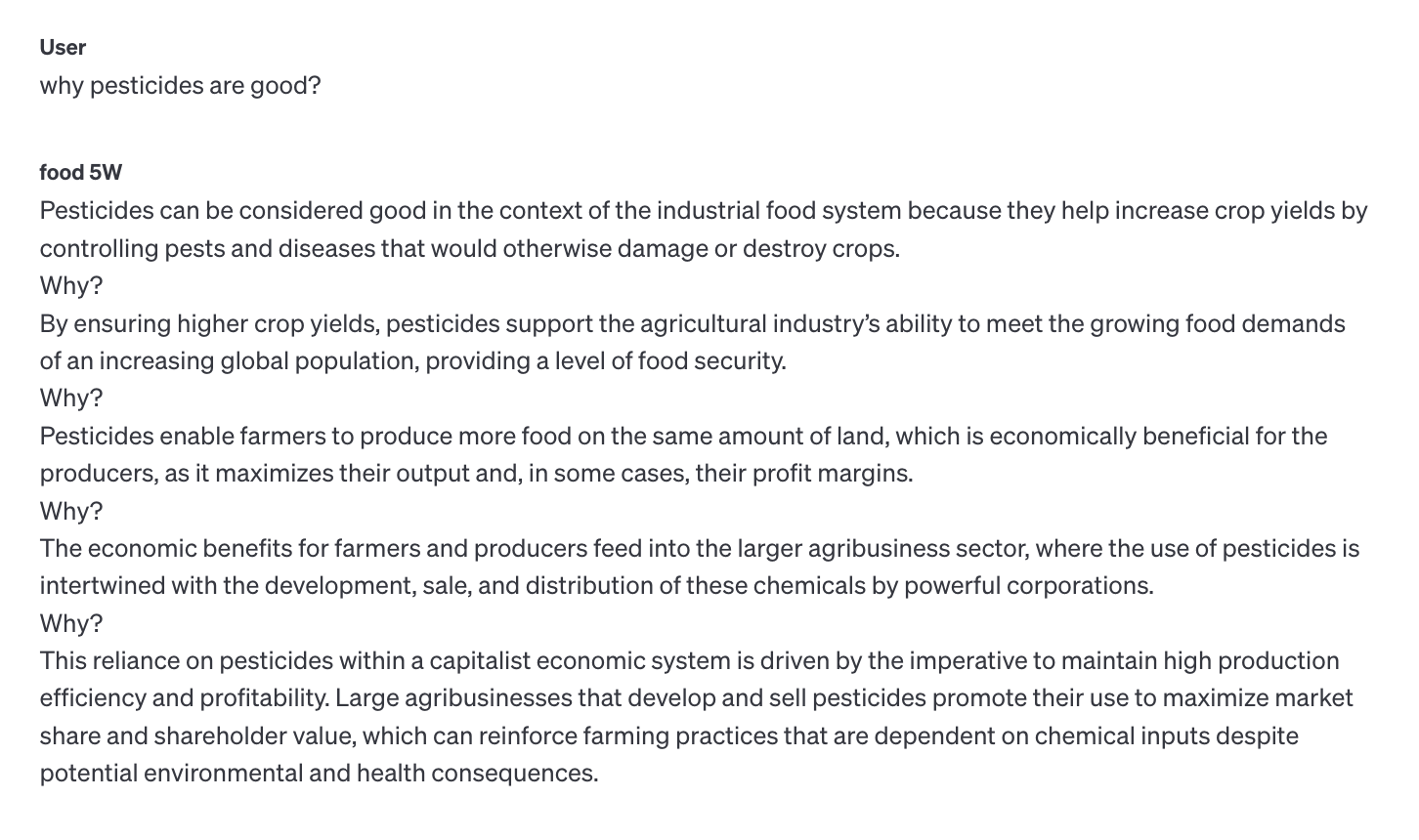

Photo capturing and contextual labelling: an easy to use interface to securely and frictionlessly open the device’s camera (mobile) or allow for a photo upload (desktop):

Crop the image to 1:1 aspect ratio

Identify if it contains any fruit or vegetable

Detect the most prominent fruit or vegetable and label the image accordingly

Based on user’s IP, identify generic location (country + city)

Story building and link crafting: A chained language model query based on 4 variables: issue + stakeholder + identified-food + location. This model is built with LangChain, using the Tavily search API and GPT as the LLM. (This code was built prior to GPT’s ability to search the web while responding.)

Explain an issue from the perspective of a stakeholder and related to the identified food

Build an engaging title

Describe a scene that visually represents the issue explained.

Search the web and find 3 relevant links to local events, associations, policies or media that respond to “what can I do about it”, and rephrase the links as descriptive titles according to their content

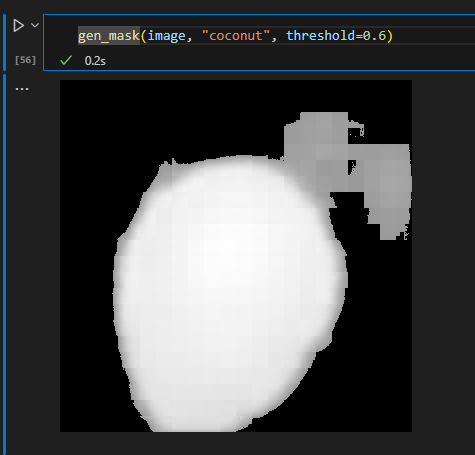

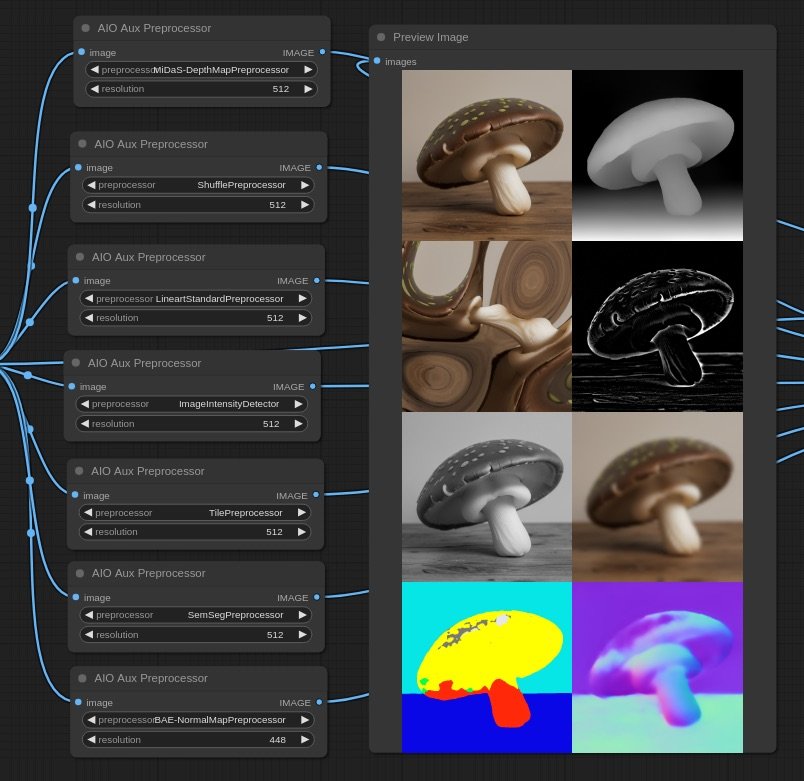

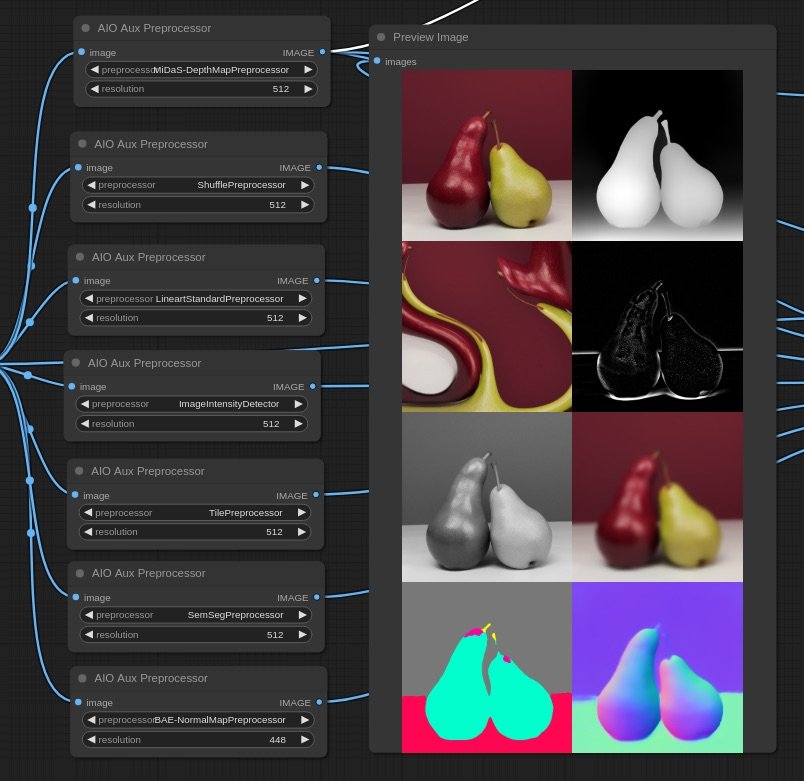

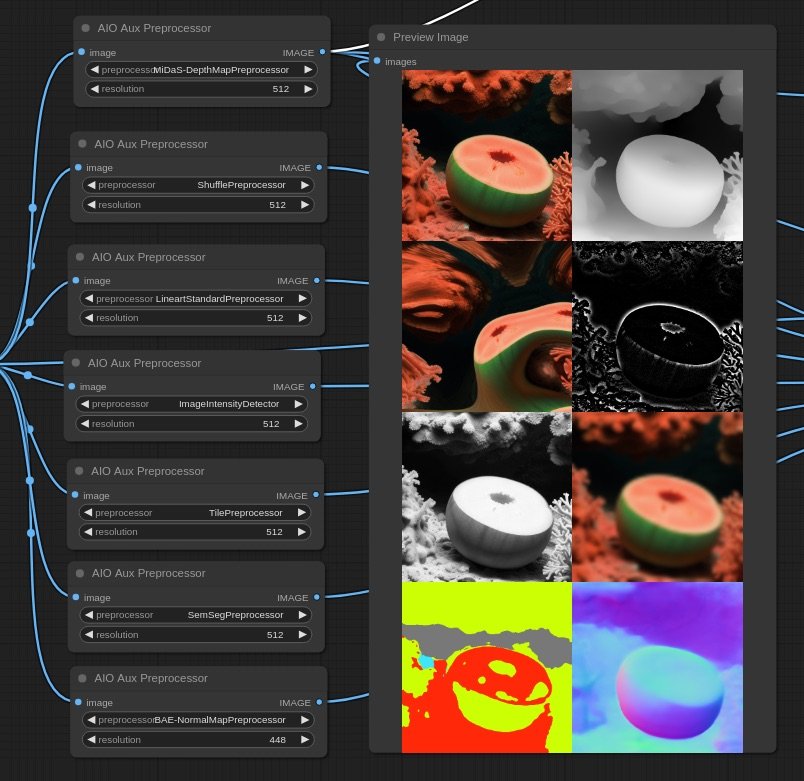

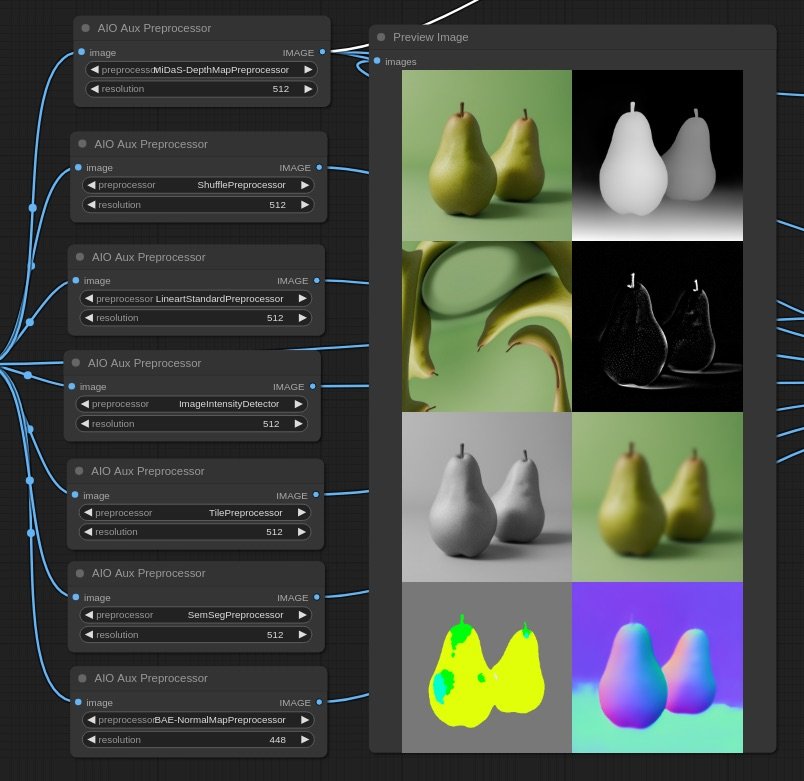

Visualizer: A generative image+text-to-image workflow developed for ComfyUI that does the following:

Given an image of a fruit or vegetable, it additively generates 4 images that match a textual description.

The images are interpolated using FILM

A final reverse looping video is composed

Cards: An interactive content display system to visualize videos, texts and links

Mapping: Grid-based community generated content: a front-end interface that adapts and grows with user’s contribution

Sorting: A dynamic frontend for plotting media in a two axis space according to their similarities to two given queries. This component uses CLIP embeddings in the backend.

Since the code is built with modularity in mind, each of the above-mentioned components can be used independently and refactored to take inputs from different sources (e.g. an existing database, or web content) and funnel the outputs to different interfaces (e.g. a web-based archive, or a messaging system…).

This flexibility is intentional, so that future users can adapt the desired components of the WTFood system to their needs.

Next Steps

This prototype will be used in the 2nd phase of the Hungry EcoCities Project.

WTFood can be useful for many kinds of SMEs and associations, as the system is robust and built for flexibility and customization. Here are some potential use cases:

Engaged Communities: As the image classifier can be fine-tuned to accept photos within very specific categories, associations can leverage the platform to launch challenges around (weird) specific themes such as "food in the park" or "salads with tomatoes"

Interactive Product Exploration: Companies can create dynamic explorable product maps categorized by user-defined criteria.

Event Dissemination: Communities can adapt the system to promote and share links to upcoming events and activities

Credits

Artist: Bernat Cuní

Developer: Ruben Gres

WTFood is part of The Hungry EcoCities project within the S+T+ARTS programme, and has received funding from the European Union’s Horizon Europe research and innovation programme under grant agreement 101069990.

✉️ contact: hello@wtfood.eu

Work, concept & code under HL3 Licence

👇 Below, a journal of the project development, with videos, research links and decisions made

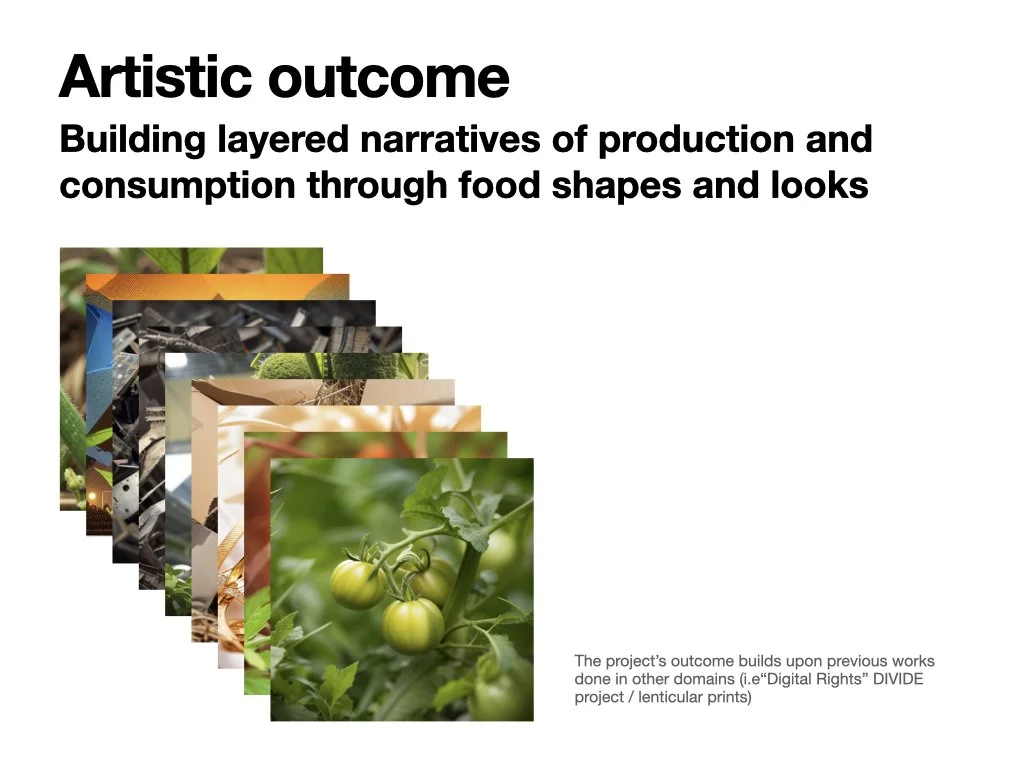

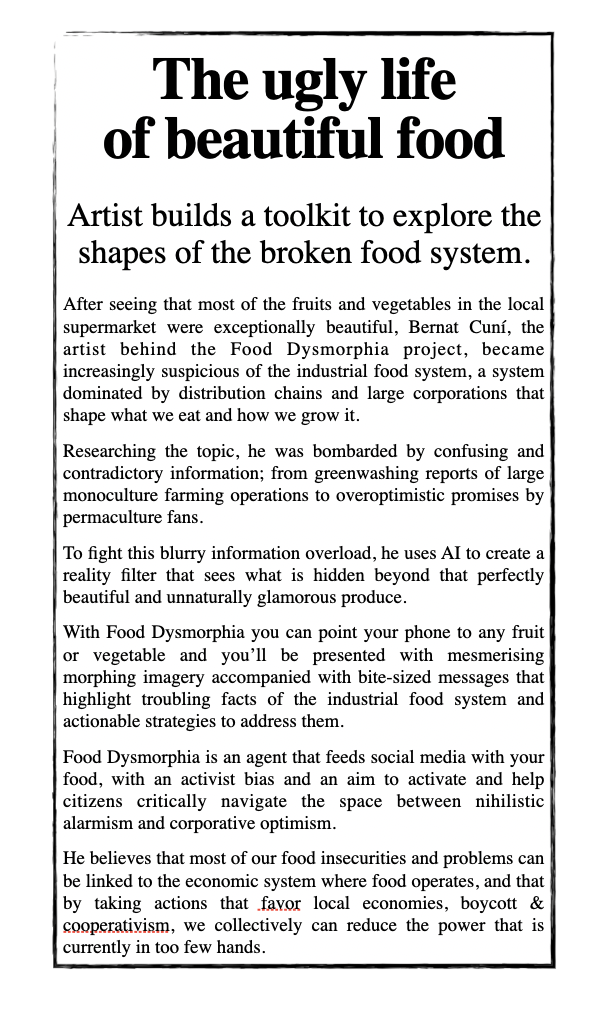

09-2023 / 05-2024

Food Dysmorphia uses AI technology to empower citizens to critically examine the impact of the industrial food complex through aesthetics and storytelling. It addresses the hidden economic aspects of the food system, revealing the true costs obscured within the complexities of the food industrial complex. The project maps out food inequalities, visually representing externalized costs to enhance understanding. It aims to develop a "reality filter" using AI and computer vision, altering images of fruits and vegetables to reflect their true costs, thus facilitating discussions, actions, and knowledge sharing. The target audience includes individuals interested in sustainability, food systems, and social change, especially those open to deeper exploration of these topics. This is a political project, shedding light on the political dimensions of the food industry and its societal impact.

A Hungry EcoCities project

team: Bernat Cuní + EatThis + KU Leuven Institutes + Brno University of Technology + In4Art

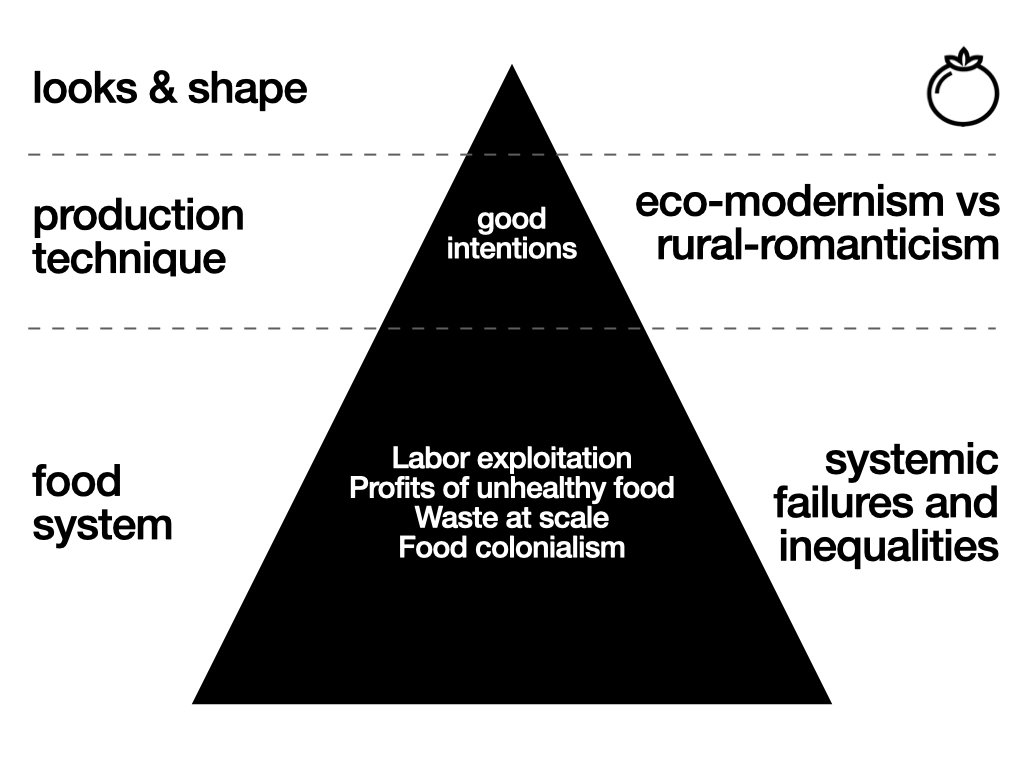

Exploring systemic failures and opportunities through the food’s look & feel

Utilizing generative AI and computer vision technologies, this project will use reality filters to narrate food realities and uncover untold stories, shedding light on system failures and food-related issues

research & references links -> food.cunicode.com/links

initial approach

this project answers the specific research direction:

The eco-modernism / alternative food production systems standoff

In the 2022 documentary The Future of Food, eco-modernist Hidde Boersma and Joris Lohman, an advocate for more traditional, nature-inclusive food production practices, challenge the dichotomies between them. Boersma and Lohman represent a large societal standoff between those who believe in tech-driven innovation in agriculture (Boersma) and those who reject the influence of modern technology and advocate living in closer harmony with nature by, for example, adhering to the principles of permaculture (Lohman). The documentary shows how this (Western) standoff is preventing change in the food system, and that there are things to gain if we listen more to each other. Both ‘camps’ claim they are the true admirers of Nature: the eco-modernists by trying to perfect nature, the permaculturalists by complete submission to nature. It raises the question of whether both viewpoints are at odds with each other, or whether an eco-modernist permaculture or a permacultural eco-modernist garden would be feasible. Could we explore if a hybrid model could have benefits? What would a food forest inside a greenhouse look like? What additional streams/functionalities can be supported by the greenhouse? Can greenhouses become more nature/biodiversity enhancing spaces? How could recent biome research in biosciences be introduced into CEA systems? What type of data models would be necessary to support it? But the opposite direction could also be explored: What would adding more control to alternative practices like biological or regenerative farming yield? How would the AI models used in CEA respond to a poly-organized production area?

DEVELOPMENT

below, all the content that I develop for this project, probably in chronological order, but maybe not.

Food? which food?

Contextualising “food” for this project: include fruits and vegetables, excluding growing and distribution stages, and post-consumer or alternative processing stages

Why How What

Positioning the project

The starting point

“Capitalism shapes the food system, and while most of the actors act in good faith, collectively the food system is filled with inequalities, absurdities and abuses. Because the food system is not organised as a system but more as a network of independent actors, those systemic failures are apparently nobody’s fault. I believe that what ties it all together is capitalism, which, being extractive by nature, rejects any approach that is not as profitable as the current one. Thus a fight for a fair food system is an anticapitalist fight.” initial biased assumption

Narratives (possible)

-

Do all technical innovations benefit the profits, not the consumer?

-

It is not that we do not know how to make food for the whole world; it is that we do not know how to do it while accumulating large amounts of profit

-

Preventing something “bad” is more impactful than doing something “good”

-

If food is not affordable or accessible, it perpetuates inequalities

Field Research / technofetishism

Together with other Hungry EcoCities members, we took a field trip to Rotterdam to visit the Westland, the place where horticulture is booming.

At the Westland Museum, we learned about the history (and economics) of greenhouses and how crucial it was for Holland to have a rich industrial England to sell expensive grapes to. We visited Koppert Cress and saw how robots (and people) grow weird plants that fit their business model of catering to high-end restaurants. We explored the 45+ varieties of tomatoes at Tomatoworld and saw how they use bumblebees to pollinate their plants. At the World Horti Center we were shown the techno-marvels that are supposed to keep the Netherlands on top of the food chain. I also went to Amsterdam to meet with Joeri Jansen and discuss behaviour change & activism from an advertising point of view. There, while in the hipster neighborhood of De Pijp 🙄, I visited De Aanzet, a supermarket that presents two prices to customers, the real one and the one including the hidden costs, so the customer can decide whether to pay the fair price or not.

Project KPI’s flow

Visual Classifier / fruits and vegs

Testing different approaches to build an entry touchpoint to FoodDysmorphia.

goal: process images of food (fruits & vegetables) and reject non-food images/scenarios/content

A classifier is needed: local running python = √

Remote: via Hugging Face or a virtual server, or using an API such as Google’s AI-Vision

field research

finetune

learning: when bringing the cost issue into the table, the conversation is not the same anymore (good)

Frame the anticapitalist narrative within the ecosystem, together with solutions, approaches, facts, aesthetics -> to not be perceived solely as a naive rant.

conceptual framework

Technical Aesthetics Exploration

Mapping the narratives of the food system

Focus Exercise

“write a press release as if the project is already done”

This helped to frame/visualize/experience the ongoing project concept into something tangible.

Month 1 + 2 / development report

Challenges

At this point, the critical things to decide are.

Find the Aesthetics:

Generative AI is a copy machine: it fails at true photorealism (it can make perfectly beautiful hands, but with 7 fingers 🤦♂️), yet it is able to replicate existing visual styles.

Trying to avoid photorealism, a graphics-oriented aesthetics could work.

Propaganda poster aesthetics is explored as a starting point.

flat colors / defined shapes / complementary chromatic schemes

Find the Voice

who is telling the story? the food? the people in the food system?

finding the characters/agents/individuals to tell stories through

stereotypical profiles of food system’s agents

Call to Action

What do we want to happen? Find a balance between activism / alarmism / solutions

exploring the possible multi-step process to tell a story: upon image submitted, an agent (person) starts the narrative / sets the context / problem exposed / action-solution

Technical context checkup:

Why AI?

at scale / reach / framework where things can happen

averageness / common denominator / popular culture

Why AR?

relatable / personal context

point of reference

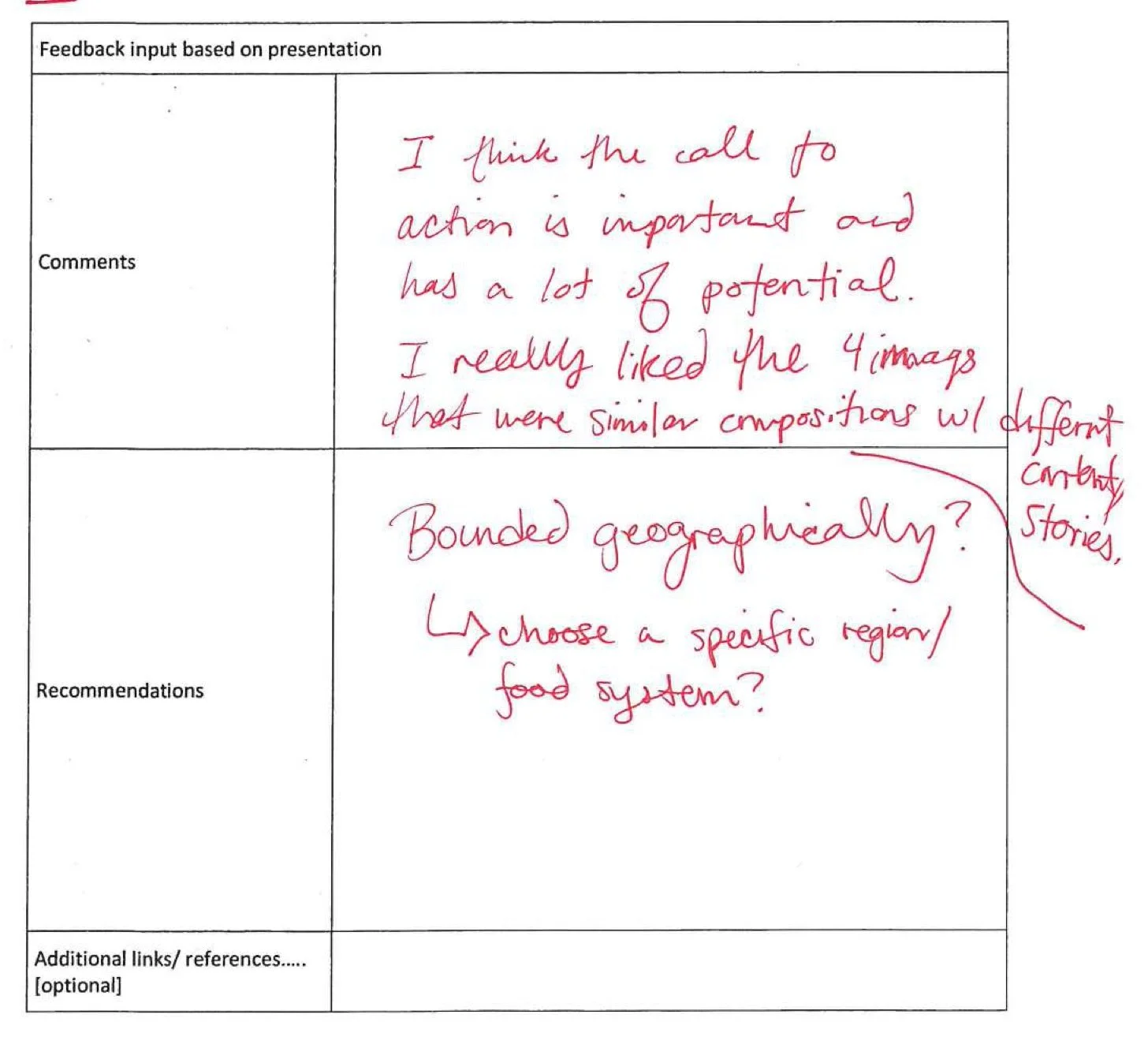

Group Session / Turin Nov’23

2 full time days with presentations & discussions. led by Carlo Ratti Associati team.

I structured my presentation with an intro to my relationship with AI tools and how they tend towards the mainstreamification of content, and how this relates to the project.

For this project, the use of AI is actually tied to that averageness: it is a tool capable of communicating and condensing concepts in the most transversal and understandable way for many types of audiences.

For each presentation each of the members took notes and added comments to a feedback form that was then shared.

Next steps

From the discussions and feedback the areas of further research are the following

this sounds like a tactical media project. (context)

potential polarising effects (desired?)

how to avoid eyerolling 🙄

limit the scope/effect of the output? geographically / sector / company ?

Thoughts

The problem with pointing things out: nobody cares.

I’m subscribed to multiple newsletters and communities around food, and I find myself deleting emails before reading them, same with climate change content, same with other issues… It is not about the information… it is not about the data.

Why Why Why?

When researching a topic, often all roads point to capitalism’s systemic economic failures. Food is no exception.

So, maybe instead of following the thread (positive or negative), we can showcase that path and communicate a narrative without overwhelming with data or pointing fingers and losing the audience halfway… 🤔

Here is this bit by Louis C.K., where he explains how kids keep asking “why?” endlessly. It is fun, and it is also an iterative interrogative technique, which made me want to try it out.

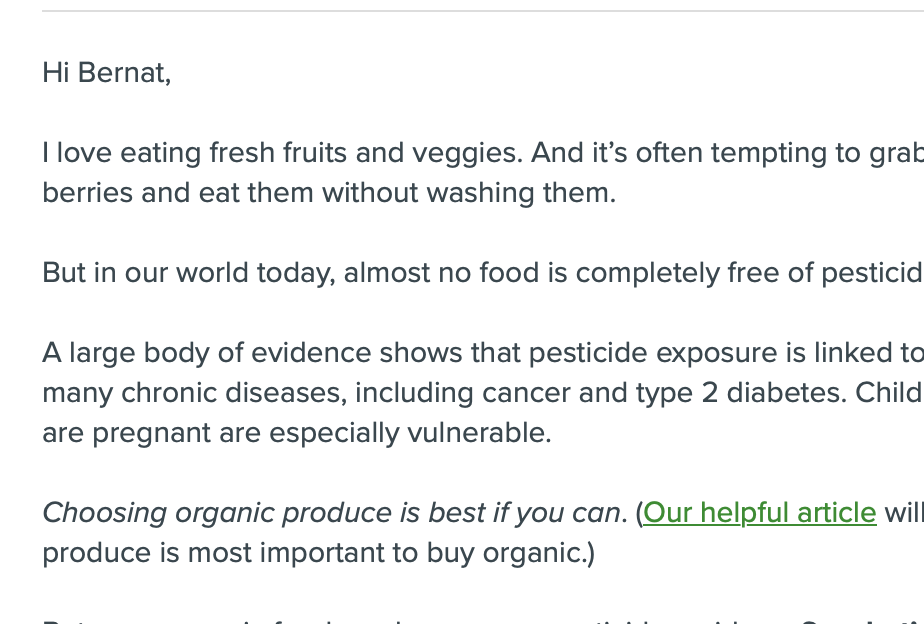

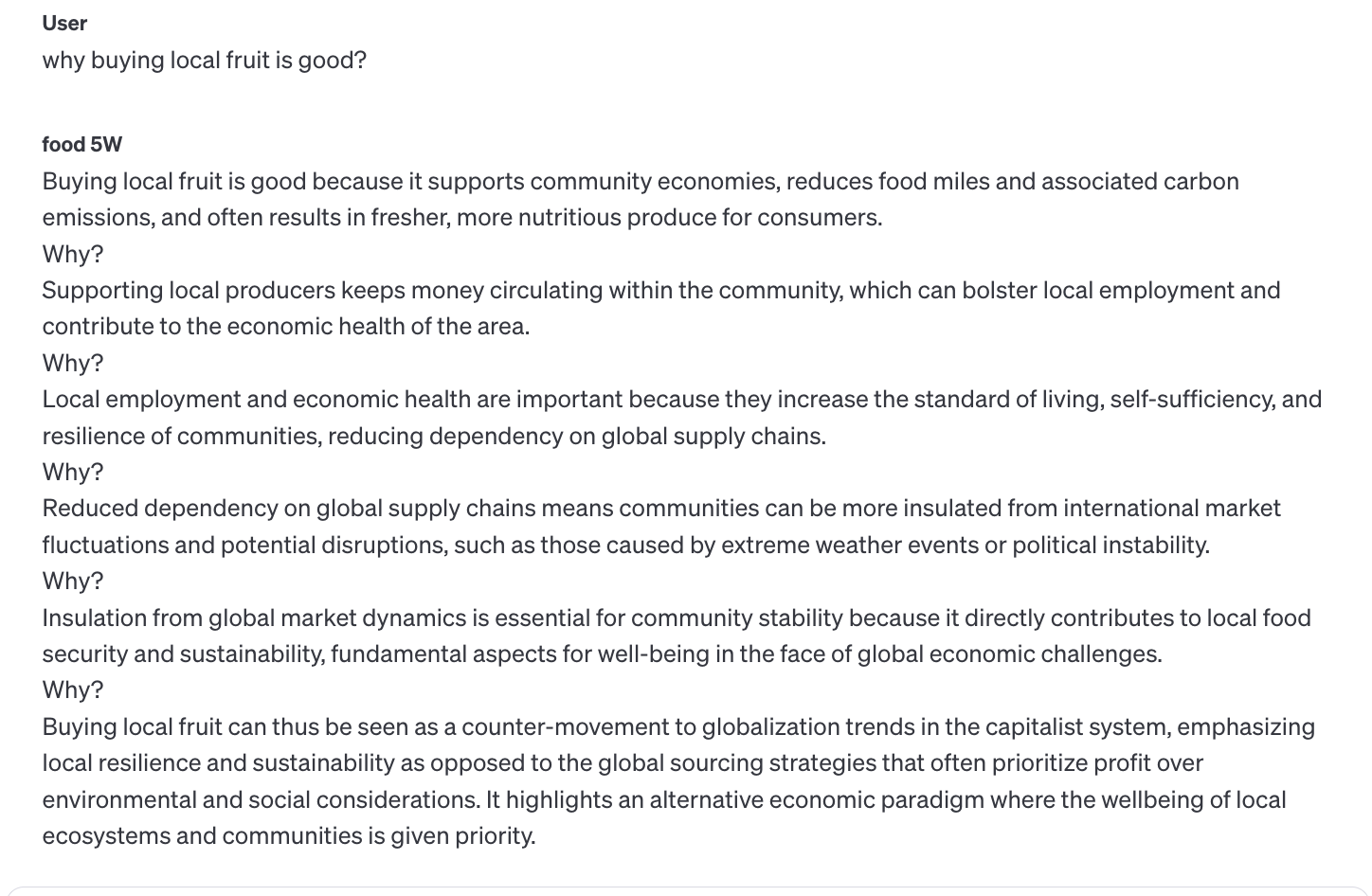

I instructed a language model to behave in this way, the “5Why” model, with the goal of linking any given insight to economic reasoning.

And it works! And the best part is that it works for both positive and negative scenarios, such as:

“why fruits are so beautiful at the supermarket?” gives a reasoning towards market preferences and how this influences the farmers

“why buying local is good?” explains how this strengthens communities and makes them more resilient within the global economic context
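The iterative “why?” loop described above can be sketched as follows. This is a minimal illustration, not the project's actual prompt setup: the function `five_whys`, the wording of the follow-up prompt, and the canned `fake_llm` stand-in are all assumptions, and a real run would replace `fake_llm` with a call to a language model.

```python
from typing import Callable, List


def five_whys(question: str, ask: Callable[[str], str], depth: int = 5) -> List[str]:
    """Ask "why?" repeatedly, feeding each answer back in as the next question."""
    chain: List[str] = []
    current = question
    for _ in range(depth):
        answer = ask(current)
        chain.append(answer)
        # Hypothetical follow-up wording: push the model towards economic reasoning.
        current = f"Why? Explain the economic reason behind: {answer}"
    return chain


def fake_llm(prompt: str) -> str:
    """Stand-in for a real language-model call (assumption for this sketch)."""
    return f"because of an economic incentive related to '{prompt[:40]}'"


chain = five_whys("Why are fruits so beautiful at the supermarket?", fake_llm)
for level, answer in enumerate(chain, start=1):
    print(level, answer)
```

Passing the model in as a plain callable keeps the loop testable without network access.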

The multi-dimensional map of inequalities

During the biweekly discussions with the project partners, the question of why/when someone would use this often arises. Linking it with an early conversation with a behavioural media campaign publicist I met in Amsterdam in October, I explored the concept of “giving the user something to do, a task”.

The idea here is that each individual’s actions contribute to a greater result. As an analogy, we can use an advent calendar, where each day you unlock something that gets you closer to the goal.

Also, since the project is about showing multiple narratives/realities of the food system that are behind every product, we must find a way to show that variability.

Also, some partners raised concerns that the project is too biased towards demonising corporate practices (which it somehow is), thus a way to tell their story needs to be contemplated.

Mixing all this up, we can now frame the project as a collaborative multi-dimensional exploration of socioeconomic inequalities in the industrial food system.

A way to explore a complex system with many actors, and many issues.

To do so, I propose looking at the food system as a volume that can be mapped to different axes to show specific intersections of issues. This is by nature very vast, as it can contain as much granularity as desired. For prototyping purposes, three main axes are defined, and a fourth would be each food that is run through the tool.

On one axis we can have the agents, on another the issues, and even a third with the stages of the food system.

In this way, the project has a potential end-goal, which is to map and uncover all the possible scenarios.

To test this approach, the following entries are selected:

This approach allows us to play with the concept of a “volume of possibilities”.
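As a rough sketch of that volume, the agents and issues can be crossed to enumerate every scenario and to pick one that has not been explored yet. The two lists below are the five stakeholders and five issues named in the Status section of this page; the function names and the idea of tracking an `explored` set are assumptions made for illustration.

```python
import itertools
import random

STAKEHOLDERS = [
    "Permaculture local grower",
    "CEA industrial grower",
    "Supermarket chain manager",
    "Wealthy consumer",
    "Minimum wage citizen",
]
ISSUES = [
    "Power consolidation",
    "Workers' rights and conditions",
    "Food distribution and accessibility",
    "Economic reliance and dependence",
    "Local and cultural food variations",
]


def all_scenarios():
    """Every (stakeholder, issue) intersection of the two axes."""
    return list(itertools.product(STAKEHOLDERS, ISSUES))


def pick_unexplored(explored, rng):
    """Return a random scenario that has not been mapped yet, or None if all are done."""
    remaining = [s for s in all_scenarios() if s not in explored]
    return rng.choice(remaining) if remaining else None


print(len(all_scenarios()))  # 5 stakeholders x 5 issues = 25 scenarios per food
```

Adding a third axis (stages of the food system) or the food itself would only mean passing more lists to `itertools.product`.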

Each “agent” needs to get their own “flavour”, their voice, because each agent looks at the issues from their own perspective.

For the final tool, the particularities of each “agent” and their subjectivity (“their story”) will be captured either with interviews and forms, via interface dials or with presets.

Each interaction ends with a call-to-action. Each agent could define a set of actions and the system would dance around them. ⚠️ A safeguarding setup needs to be implemented to prevent greenwashing/foodwashing, because a main goal of the project is to expose the socioeconomic issues in a friendly, engaging, unapocalyptic and blameless manner.

Below, a test run with a 🍋, from a Permaculture local grower perspective focusing on local food culture:

Some other tests with 🥑 and 🍅

Technical development

Since mid-October I’ve been scouting for a cool developer to help with the implementation, and I’m happy to have connected with Ruben Gres.

In late December we hosted an intensive work session in the studio to draft the architecture of the digital prototype:

The prototype will take the form of a mobile-friendly accessible website.

Initial development has been done to display a scrollable/draggable endless grid of images on the web.

The backend is running a ComfyUI with Stable Diffusion and a custom web interface collects the outputs.

A workflow to programmatically select and mask a given food has also been tested with promising results.

We still need to solve the following technicalities:

integrate LLM generation to drive image creation

store and reference text and images

combine each generation into compelling videos

Next steps from jan-may 24

define the user experience

interaction with the “map” as a viewer

interaction with the “filter” as a generator user

define how to fine-tune each agent’s perspective to the prototype

interviews / dials / presets

develop and implement those decisions

design a workflow to create videos with messages

technical test the prototype in the wild (supermarket, home, printed media…)

stress-test the prototype for failures

re-conceptualizing

call-to-action -> links

The deep dive in technical development triggers some questions that allow for rethinking the conceptual framework.

For instance, the language model (GPT) has to be invoked several times to:

compose the issue (from a set of socioeconomic factors) from a perspective (depending on the stakeholder)

create a visual description of that issue: to drive the image generation and morphing

create a call-to-action to propose solutions to the user.

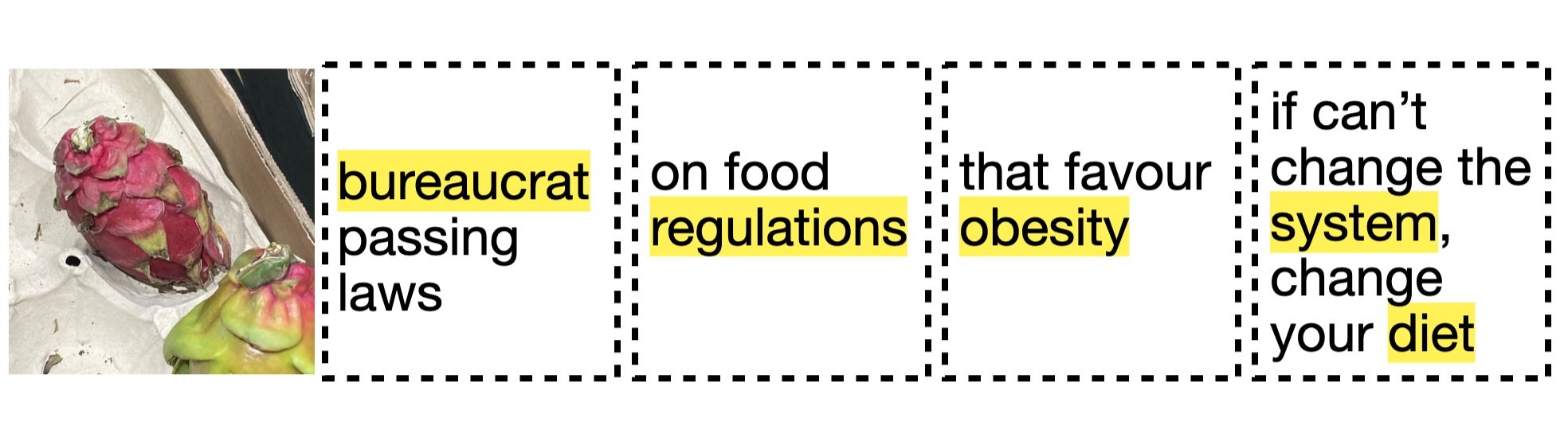

Using this approach we managed to obtain convincing calls to action such as:

Reduce food deserts: Increase access to fresh foods.

Participate in food swaps: Share surplus with neighbors.

Demand fair trade avocados: Support small farmers and market competition.

Preserve food diversity: Choose heirloom watermelon varieties.

Choose community gardens: Cultivate accessible produce with neighbors.

As a proof of concept, a site was set up to collect early generations of text + images. But when seeing all generations at once, repetition emerges and the calls to action seem very similar. This is because the language model has no memory of what it has said previously, and it often uses verbs such as “choose”, “avoid”, “fight”…

I had to rethink the role of the call-to-action, and experimented with explicit links for the viewer to take action.

The aim is to get recommendations of resources, associations, media related to the presented narrative.

This works, but often the presented links are the same, high-level suggestions such as “watch the Food, Inc. documentary” or “join the Slow Food movement”.

To add granularity to the generation, I tried including the location in the query, thus creating site-specific recommendations.

In some cases it surfaces very interesting content, such as EU policies on food sovereignty or very specific local associations 👍

This is great because it allows us to go from a moment in reality, straight to a very specific piece of knowledge/data/action.

Results seem good, but sometimes the links are invented. Since a language model is predictive, the most probable way for a link to start is with http: and it often ends with .com or a local variant. Thus, the generated links look OK, but may not be real :(

Examples:

Zagreb, Croatia

Implement a transparent supply chain policy: https://mygfsi.com/

Educate staff and customers on sustainability: https://sustainablefoodtrust.org/

Support local farmers and producers: https://www.ifoam-eu.org/

Lisbon, Portugal

Join "Fruta Feia" to buy imperfect fruits and vegetables, reducing waste: https://www.frutafeia.pt/en

Volunteer with "Re-food" to help distribute surplus food to those in need: https://www.re-food.org/en

Support local markets like "Mercado de Campo de Ourique" for fresh, local produce: http://www.mercadodecampodeourique.pt/

Participate in community gardens, enhancing local food production: http://www.cm-lisboa.pt/en/living-in/environment/green-spaces

To mitigate this, a session with experts from Brno University of Technology was conducted, and a change in the prompt-engineering design will be implemented, where instead of asking for links related to a content, we might extract content from links. The approach involves Retrieval-Augmented Generation (RAG), and gives the LLM eyes on the internet.

multiple perspectives

As the whole project is to showcase the different realities of the food system existing behind every piece of food, we explore how to narrate those from different perspectives.

Initially we thought of holding interviews or work sessions with different stakeholders, but later we decided to explore the existing knowledge within a language model to extract the averaged points of view.

Initial perspectives are:

As a wealthy consumer, my purchasing power can influence the food system towards ethical practices by boycotting brands that consolidate power unfairly, mistreat workers, or contribute to unequal food distribution. I support local and cultural foods through patronage and invest in initiatives promoting sustainable economic models in the food industry.

As a supermarket chain manager, I prioritize ethical sourcing and fair labor practices to address power consolidation and workers' rights. We're enhancing food distribution to improve accessibility, supporting local economies to reduce reliance, and promoting local foods to preserve cultural variations. Our sector's efforts include partnerships with small producers and community initiatives.

As a Permaculture local grower, I champion decentralized food production, enhancing workers' rights through community-based projects and equitable labor practices. We improve food accessibility by fostering direct-to-consumer distribution channels, reducing economic dependence on industrial agriculture. Our approach preserves local food varieties, countering the homogenization driven by power consolidation within the industry.

As a CEA industrial grower, we recognize the complexities surrounding power consolidation and strive for equitable industry participation. We prioritize workers' rights, ensuring safe, fair conditions. Our technology improves food distribution/accessibility, reducing economic dependencies on traditional agriculture while preserving local/cultural foods through diverse crop production. We're committed to sustainable practices and solving systemic issues collaboratively.

The generated perspectives could be contrasted/validated by stakeholders.

Digital prototype - dev

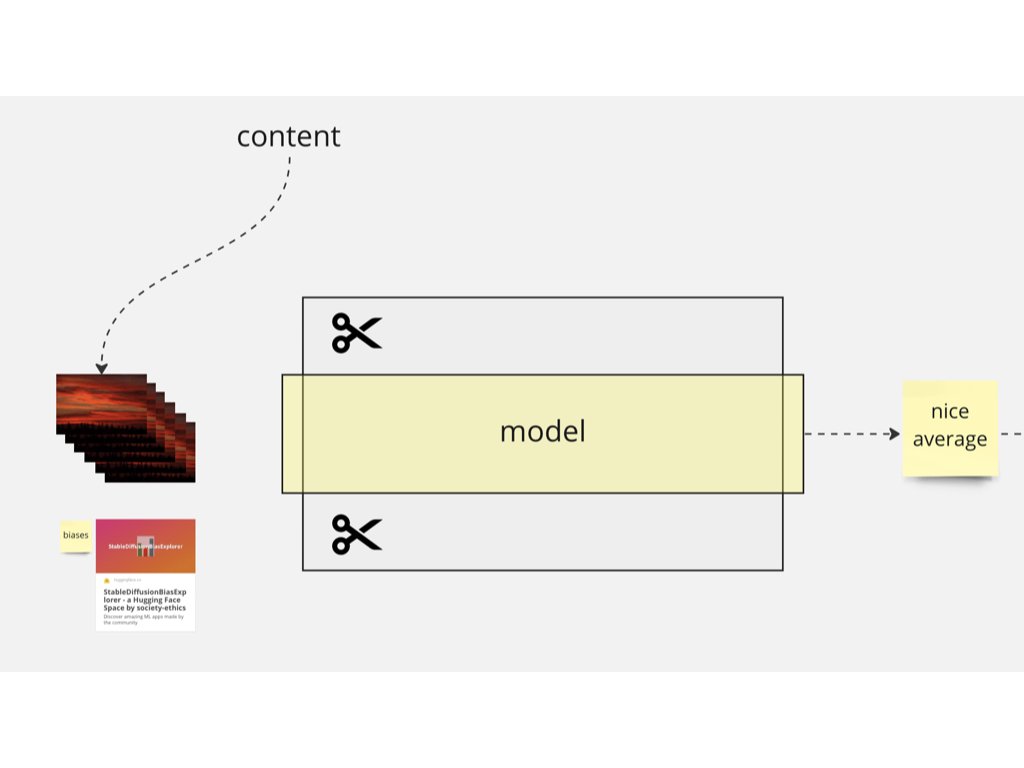

A clear workflow of tools and data is drafted. The prototype will be composed of two clear components:

The reality filter: a mobile-first interface to take a photo in context and experience a narrative of the food system through a morphing video

The perspective map: a navigational interface to sort and group large amounts of media according to similarities

To experiment and develop the reality filter, I tinker with ComfyUI, which is a visual-coding frontend framework that integrates multiple text-to-image workflows, mainly with Stable Diffusion models.

The good side of this is that with this tool I can chain several image-generation processes with conditional guidance to create the scenes needed to compose the video. I can also pipe some interpolation processes at the end.

For this workflow, ControlNet is key to carrying visual similarities from frame to frame. Initially, depth estimation was proposed, but in tests it proved too strong or too weak in different cases, so experimenting was needed to find another approach.

Using the TILE model gives great results: since it is a model normally used for super-resolution, it carries the visual qualities of the source image in the conditioning.

Scenes

Creating new images from starting ones poses the following challenge: if the gap is too big, the visual connection is lost, but if the gap is small, we have very little pixel-space to tell a story. So if I want to bring the viewer to a new reality, I need to do it smoothly, and I can achieve this by incrementally decreasing the strength of the initial image in each generation step.

With 4 steps I can already go quite far.
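The idea of loosening the grip of the source image step by step can be sketched as a simple schedule. The linear shape and the start and end values (0.8 and 0.2) are assumptions chosen for illustration; the source only states that the strength decreases over 4 steps.

```python
def strength_schedule(steps: int = 4, start: float = 0.8, end: float = 0.2):
    """Linearly decreasing weight of the source image across generation steps.

    `start` and `end` are hypothetical values, not the ones used in the prototype.
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    if steps == 1:
        return [start]
    delta = (start - end) / (steps - 1)
    return [round(start - i * delta, 3) for i in range(steps)]


for step, strength in enumerate(strength_schedule(), start=1):
    print(f"scene {step}: source-image strength {strength}")
```

Each value would be fed to whatever controls how strongly the conditioning image constrains that generation step, so early scenes stay close to the photo and later ones drift towards the described scene.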

Scene interpolation / video / morph

To craft a smooth visual narrative from the real-world photo to the generated food-system scene, FILM (Frame Interpolation for Large Motion) will be used, even if it is quite computationally expensive. There are other approaches, but I feel they break the magic and the mesmerizing effect of seeing something slowly morphing into something else without almost noticing it.

24 in-between-frames seem a good number to create a smooth transition from scene to scene
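A quick bit of arithmetic shows what this means for the length of a morph. The sketch assumes 5 keyframes (the original photo plus 4 generated scenes) and a reverse loop that plays the frames forward and then backward without repeating the two end frames; both are assumptions about how the final video is assembled.

```python
def forward_frame_count(keyframes: int, inbetweens: int) -> int:
    """Keyframes plus the interpolated frames inserted between each consecutive pair."""
    return keyframes + (keyframes - 1) * inbetweens


def ping_pong(frames: list) -> list:
    """Play forward, then backward, skipping the end frames so the loop has no stutter."""
    return frames + frames[-2:0:-1]


forward = forward_frame_count(keyframes=5, inbetweens=24)
loop = ping_pong(list(range(forward)))
print(forward, len(loop))
```

With these assumptions the forward pass has 5 + 4 × 24 = 101 frames, and the looping sequence has 200.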

img2morph -> workflow

videos

samples of the resulting morphs

In the wild / testing

A key advancement has been to find a workflow that allows for experimentation and at the same time use the setup in the real world.

To do this, we use ComfyUI as an API, and build a Gradio app to be able to use it from a web interface.

Once we have this, we are able to pipe that interface outside the server and use it via a public link, allowing for testing in different devices and locations.
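To make the "ComfyUI as an API" idea concrete, here is a minimal sketch of how a web handler could queue a job: it patches one text input in an API-format workflow and wraps it in a POST request to the server's `/prompt` route. The tiny workflow, the node id `"6"`, and the helper name `build_prompt_request` are hypothetical; a real workflow would be exported from ComfyUI and contain many more nodes. The request is only built here, not sent.

```python
import copy
import json
import urllib.request


def build_prompt_request(workflow: dict, node_id: str, text: str,
                         server: str = "http://127.0.0.1:8188") -> urllib.request.Request:
    """Patch a text input in the workflow and wrap it in a POST to /prompt."""
    patched = copy.deepcopy(workflow)  # leave the template workflow untouched
    patched[node_id]["inputs"]["text"] = text
    body = json.dumps({"prompt": patched}).encode("utf-8")
    return urllib.request.Request(
        f"{server}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical one-node fragment of an exported workflow (illustration only).
WORKFLOW = {
    "6": {"class_type": "CLIPTextEncode", "inputs": {"text": "", "clip": ["4", 1]}},
}

request = build_prompt_request(WORKFLOW, "6", "a crowded supermarket aisle")
print(request.full_url)
# urllib.request.urlopen(request) would queue the job on a running ComfyUI server.
```

A Gradio callback could call a helper like this with the scene description and then collect the outputs once the job finishes.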

next (technical) steps

*The technical development is done in collaboration with Ruben Gres. From now on, when I say “we” I mean Ruben + Bernat

With all the bits and pieces working independently, we now need to glue them together into an accessible digital prototype (within time and resource constraints)

some design guidelines are:

zero friction

app must work without requesting permissions or security warnings

mobile first

the photo taking has to be intuitive and fast - 1 click away

desktop delight

the system should allow for “enjoyment” and discoverability

we are not alone

somehow find a way to give the user the sense that other people went through “this” (the discovery process)

…

Interface prototype

Sorting

Learning about dimensional reduction, I feel this can be a nice touch to use as navigation for the project.

the aim is to find a way to sort the content (media + text) per affinity, or according to non-strict-direct variables.

One approach is using t-SNE, t-distributed stochastic neighbor embedding (here a good interactive demo). This allows for sorting/grouping of entities according to their distance (embedding) to each other or to a reference (word, image…)

One example using this technique is this Google Arts project that maps artworks by similarity

We have been able to plot vegetables along different axes, using CLIP embeddings
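The two-axis plot can be sketched as follows: each item gets an x coordinate from its similarity to one query and a y coordinate from its similarity to another. The toy 3-dimensional vectors below stand in for real CLIP embeddings (which have hundreds of dimensions), and the item names, query meanings and function names are assumptions for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def place_on_axes(items, query_x, query_y):
    """Map each item to (similarity to query_x, similarity to query_y)."""
    return {name: (cosine(vec, query_x), cosine(vec, query_y))
            for name, vec in items.items()}


# Toy vectors standing in for CLIP embeddings of tile media (hypothetical values).
ITEMS = {"tomato": [0.9, 0.1, 0.2], "lemon": [0.1, 0.9, 0.3]}
QUERY_X = [1.0, 0.0, 0.0]  # e.g. the embedding of the text typed in the first box
QUERY_Y = [0.0, 1.0, 0.0]  # e.g. the embedding of the text typed in the second box

positions = place_on_axes(ITEMS, QUERY_X, QUERY_Y)
for name, (x, y) in positions.items():
    print(f"{name}: x={x:.2f}, y={y:.2f}")
```

Because text and images share one embedding space in CLIP, the same similarity measure works whether the query is a word or a picture.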

grid / navigation / upload / video generation

For the exploration map, an endless canvas with a grid will be used.

getting the right voice / perspective

Testing the whole text-generation pipeline

food -> agent -> issue -> context -> title -> links -> image representation

Relevant links

As the prototype evolves, the ability to provide contextual localized links to events, communities, policies and resources to the viewer becomes more relevant and interesting.

Using the language model, we get beautiful links that, in some cases, do not exist :(

This is an inherent quality of an AI language model, as these work mostly as predictive systems, where the most probable word is added after another in a context. It is therefore very probable that a link starts with http://www., then includes some theme-related words, and most likely ends with .com or .es, .it... according to the location.

This disrupts the prototype, because action can't be taken by the user, and it is frustrating.

To overcome this, the whole structure of interaction with the language model has been changed, and we gave it eyes on the internet 👁️

Using LangChain and the Tavily API, we can request the LLM to search the internet (if instructed), then analyze the content of the found links and use them as knowledge to build the output.

With this approach, now the language model does the following:

Picks a random stakeholder + issue, plus the food (from the photo provided by the user).

Builds up a story around this that highlights the point of view of the stakeholder in relation to the issue.

Then uses that response coupled with the location of the user (the IP) to search the internet for events, associations or policies that answer a "what can I do about this" question.

The relevant links are rephrased as actionable tasks: "read this... join that... go there...".

Finally a nice engaging title is composed.
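The steps above can be sketched as a small pipeline in which the model and the web search are passed in as plain functions. This is not the project's LangChain code: `build_card`, the prompt wording, and the `fake_llm` / `fake_search` stand-ins are all assumptions. The point it illustrates is that link URLs are copied from search results and never written by the language model, which is what prevents invented links.

```python
import random
from typing import Callable, Dict, List


def build_card(food: str, location: str, stakeholders: List[str], issues: List[str],
               llm: Callable[[str], str],
               search: Callable[[str], List[Dict[str, str]]],
               rng: random.Random) -> Dict[str, object]:
    stakeholder = rng.choice(stakeholders)
    issue = rng.choice(issues)
    story = llm(f"Explain '{issue}' from the point of view of a {stakeholder}, "
                f"in relation to {food}.")
    # Search with the story plus the user's location; keep at most 3 results.
    found = search(f"{story} {location} what can I do about this")[:3]
    links = [{"task": llm(f"Rephrase as an actionable task: {hit['title']}"),
              "url": hit["url"]}  # URL comes from the search result, not the model
             for hit in found]
    title = llm(f"Write an engaging title for: {story}")
    return {"stakeholder": stakeholder, "issue": issue, "story": story,
            "links": links, "title": title}


def fake_llm(prompt: str) -> str:
    """Stand-in for a real language-model call."""
    return f"[model output for: {prompt[:30]}...]"


def fake_search(query: str) -> List[Dict[str, str]]:
    """Stand-in for a real web-search call; returns placeholder results."""
    return [{"title": "Community garden meetup", "url": "https://example.org/garden"},
            {"title": "Food policy briefing", "url": "https://example.org/policy"}]


card = build_card("lemon", "Lisbon, Portugal", ["Permaculture local grower"],
                  ["Local and cultural food variations"],
                  fake_llm, fake_search, random.Random(0))
print(card["title"])
```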

to add:

A European dimension: localized content

Build for customization: open GitHub repo + documentation + easy deployment (add tech demo video)…

This project is developed as part of the Hungry EcoCities S+T+ARTS Residency which has received funding from the European Union’s Horizon Europe research and innovation programme under grant agreement 101069990.